As organisations increasingly deploy AI-driven applications – chatbots, virtual assistants, and user-generated content platforms – one of the most critical concerns is content safety. How do you ensure that an AI-powered system does not produce or facilitate harmful, hateful, violent or self-harm-promoting content?

For large enterprises building product solutions, integrating robust moderation mechanisms is now imperative.

In August 2022, OpenAI launched a major upgrade to its moderation toolkit: a new and improved Moderation endpoint that helps AI developers detect undesired content. It is faster and more accurate than earlier filters, free for OpenAI API users, and better suited to enterprise deployment. This blog explores what the tool offers, why it matters to large companies, how to approach adoption and integration, what the trade-offs are, and how Accelerai supports clients adopting these systems.

What’s New: Core Features of the Moderation Endpoint

Here are the key enhancements in OpenAI’s updated moderation tooling:

Faster & more accurate classifiers:

The endpoint uses GPT-based classifiers trained specifically to detect undesired content (sexual, hateful, violent, or self-harm-promoting) more reliably than previous filters.

Free access (for OpenAI API-generated content):

If you use the moderation endpoint to monitor the inputs and outputs of the OpenAI API, it costs nothing extra, so monitoring adds minimal overhead.

Unified API call for moderation:

Rather than building your own classifier or integration logic from scratch, you can call a single endpoint to assess text content for policy categories – accelerating time-to-market.
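For a sense of how small that integration is, here is a minimal Python sketch using only the standard library. It assumes the public REST endpoint `https://api.openai.com/v1/moderations`, an API key supplied by the caller, and the response shape in which each entry of `results` carries `flagged`, `categories` and `category_scores`; check the current API reference before relying on it. The names `build_moderation_request` and `moderate` are illustrative helpers, not part of any SDK.

```python
import json
import urllib.request

# Assumed public REST endpoint for OpenAI's moderation API.
MODERATION_URL = "https://api.openai.com/v1/moderations"


def build_moderation_request(texts, api_key):
    """Build (but do not send) a POST request asking the endpoint to classify `texts`.

    `texts` may be a single string or a list of strings; the endpoint accepts either.
    """
    body = json.dumps({"input": texts}).encode("utf-8")
    return urllib.request.Request(
        MODERATION_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def moderate(texts, api_key, timeout=10.0):
    """Send the request and return the per-input `results` list from the JSON response."""
    req = build_moderation_request(texts, api_key)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.load(resp)
    # Each result holds `flagged` (bool), `categories` (bools) and `category_scores` (floats).
    return payload["results"]
```

With a key available, `moderate(["first post", "second post"], os.environ["OPENAI_API_KEY"])` would return one result per input, in order. The official SDKs wrap the same call if you prefer not to handle HTTP yourself.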

Robust across varied applications:

The endpoint has been built to perform across diverse use cases (chatbots, user posts, messaging systems) and clients, enabling enterprises to deploy it in different contexts and at scale.

Released evaluation dataset and technical paper:

OpenAI released an evaluation dataset and a technical paper describing its training methodology and evaluation results, which aids transparency and lets enterprises assess the classifiers rigorously.

These enhancements make it more feasible for organisations to deploy AI systems while maintaining safety and governance standards.

Why It Matters for Enterprises

For large companies implementing AI-powered solutions, the availability of a strong moderation endpoint like this has several implications:

Risk Mitigation and Brand Protection

Without reliable moderation, an AI application could inadvertently generate or facilitate content that damages brand reputation, causes user harm, or triggers regulatory or legal consequences. Having a dedicated moderation tool reduces that risk.

Faster Time to Production with Safety Built-in

Instead of spending months building, training and validating custom moderation classifiers, many enterprises can leverage this endpoint to embed safety more quickly – allowing product teams to focus on core functionality rather than infrastructure.

Enabling Sensitive or Regulated Use Cases

Use cases in education, healthcare, enterprise internal assistants, or regulated user-generated content platforms often require high trust. With improved moderation, such deployments become more viable.

Vendor & Ecosystem Evaluation

When selecting AI vendors or building partner solutions, moderation capability should be one of your evaluation criteria. Vendors that embed this endpoint (or an equivalent) or expose hooks for custom moderation will stand out.

Scale and Governance

Enterprises often require auditing, logging, monitoring, and governance over AI behaviour. A moderation endpoint that integrates with existing systems and is backed by published research makes it easier to build governance frameworks around AI systems.

Key Considerations & Trade-Offs

While the new moderation tooling is a strong step forward, enterprises should still approach adoption carefully and weigh the trade-offs:

False positives & negatives: Even the improved classifiers will not be perfect. Some benign content may get flagged (“false positives”), and some harmful content may slip through (“false negatives”). Enterprises must design workflows to manage this (human review, logging, escalation).

Scope of moderation: The tool focuses on certain policy categories (sexual, hate, violence, self-harm). Enterprises must assess whether their domain has additional categories (such as financial advice, medical advice, privacy leaks, proprietary IP) that may require supplemental filtering.
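Supplemental filtering can be as simple as a second rule layer that runs alongside the endpoint. The sketch below is hypothetical throughout: the category names and regular expressions are illustrative placeholders for a privacy-leak and a financial-advice check, not production-grade detectors, and `endpoint_flagged` is assumed to be the `flagged` value returned by the moderation endpoint.

```python
import re

# Hypothetical domain-specific rules layered on top of the moderation endpoint.
# The patterns are deliberately simple illustrations, not reliable detectors.
SUPPLEMENTAL_RULES = {
    "privacy/email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "privacy/card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "financial_advice": re.compile(r"\b(guaranteed returns?|buy this stock)\b", re.IGNORECASE),
}


def supplemental_flags(text):
    """Return the sorted names of any domain rules that match `text`."""
    return sorted(name for name, pattern in SUPPLEMENTAL_RULES.items() if pattern.search(text))


def combined_flagged(endpoint_flagged, text):
    """Treat content as flagged if either the endpoint or a domain rule flags it."""
    extra = supplemental_flags(text)
    return endpoint_flagged or bool(extra), extra
```

Keeping the extra categories in one table makes it easy for compliance teams to review and extend them without touching the integration with the endpoint.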

Latency and throughput: Calling a moderation endpoint adds latency, especially at scale or in real-time interactive systems. Product teams must benchmark and architect accordingly (for example, asynchronous checks, batching).
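One asynchronous option is to run the pre-generation check at the same time as the model call instead of before it, and to discard the output if the prompt turns out to be flagged. This is a minimal asyncio sketch, assuming hypothetical async callables `moderate(text) -> bool` (True means flagged) and `generate(text) -> str`. It trades some wasted generation compute on rejected prompts for lower latency on accepted ones, and the generated output still needs its own post-generation check.

```python
import asyncio


async def guarded_generate(prompt, moderate, generate):
    """Moderate the prompt concurrently with generation; return None if it is flagged.

    `moderate` and `generate` are hypothetical async stand-ins for the moderation
    endpoint and your model call.
    """
    moderation_task = asyncio.create_task(moderate(prompt))
    generation_task = asyncio.create_task(generate(prompt))
    flagged = await moderation_task
    if flagged:
        # Stop spending compute on a rejected prompt; its output is never shown.
        generation_task.cancel()
        return None
    return await generation_task
```

On the happy path the total wait is roughly the longer of the two calls rather than their sum.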

Integration and coverage: Enterprises may have complex product architectures (multi-region, multi-language, multi-modal). They must evaluate whether the endpoint covers all relevant languages, content types, volumes and edge cases.

Governance & auditability: Even though the endpoint offers classification, your organisation must build the audit trails, logs, incident response mechanisms, policy review processes, human-in-the-loop workflows and version control. Simply using the endpoint is not enough.

Dependence on vendor infrastructure: Relying on a third-party endpoint exposes you to availability risk, change management (if the vendor updates behaviour), and potential vendor lock-in. Ensure your architecture is resilient and you have fallback plans.
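A fallback plan can be made explicit in code. The sketch below assumes a hypothetical synchronous wrapper `moderate(text) -> bool` around the endpoint and shows one possible policy: if the call errors or exceeds a timeout, the content is routed to human review (or blocked outright for high-risk flows) rather than silently allowed. The timeout value and the two policy names are assumptions to adapt.

```python
import concurrent.futures

# Hypothetical fallback policies for when the moderation service is slow or down.
FAIL_CLOSED = "block"      # safest choice for high-risk flows
FAIL_TO_REVIEW = "review"  # queue for a human instead of guessing


def moderate_with_fallback(text, moderate, timeout_s=2.0, on_failure=FAIL_TO_REVIEW):
    """Return 'block' or 'allow' from `moderate`, or `on_failure` if it errors or times out."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(moderate, text)
    try:
        flagged = future.result(timeout=timeout_s)
    except Exception:  # covers timeouts as well as network or API errors
        return on_failure
    finally:
        pool.shutdown(wait=False)  # do not block the caller on a hung request
    return "block" if flagged else "allow"
```

Failing open (allowing content when the check fails) is also a legitimate choice for low-risk flows, but it should be a documented decision rather than an accident of error handling.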

Deployment Roadmap for Enterprises

Here’s a recommended roadmap for integrating the moderation endpoint into your product/solution architecture:

1. Identify High-Risk Application Flows

Map where generated content, user-input content or AI prompts/outputs occur in your system. Prioritise flows with high brand, regulatory or user-safety stakes.

2. Define Moderation Policy & Escalation Paths

Establish an internal moderation policy: what content is unacceptable, what thresholds trigger blocking vs review vs warning, who reviews flagged content, and response time SLAs.
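Block, review and warning thresholds translate naturally into a small policy table. This sketch assumes you apply your own thresholds to the endpoint's `category_scores` (separately from its built-in `flagged` flag); the numbers are placeholders to be tuned on your own data, not recommended values, and `decide` is a hypothetical helper.

```python
# Hypothetical internal policy: per-category score thresholds mapped to actions.
# Category names follow the endpoint's `category_scores` keys; numbers are placeholders.
POLICY = {
    "self-harm": {"block": 0.50, "review": 0.20, "warn": 0.10},
    "violence": {"block": 0.80, "review": 0.40, "warn": 0.20},
    "hate": {"block": 0.70, "review": 0.30, "warn": 0.15},
    "sexual": {"block": 0.80, "review": 0.50, "warn": 0.30},
}
DEFAULT_LIMITS = {"block": 0.90, "review": 0.60, "warn": 0.40}
SEVERITY = {"allow": 0, "warn": 1, "review": 2, "block": 3}


def decide(category_scores, policy=POLICY, default=DEFAULT_LIMITS):
    """Return the most severe action triggered by any category, plus the reasons."""
    action, reasons = "allow", []
    for category, score in category_scores.items():
        limits = policy.get(category, default)
        for candidate in ("block", "review", "warn"):
            if score >= limits[candidate]:
                reasons.append((category, candidate, score))
                if SEVERITY[candidate] > SEVERITY[action]:
                    action = candidate
                break  # record only the most severe level reached per category
    return action, reasons
```

Returning the reasons alongside the action gives reviewers and auditors the context for every decision.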

3. Prototype Moderation Integration

Call the moderation endpoint from a test environment. Send sample inputs (user text, generated text) through the moderation API and record the classifications, scores and resulting decisions. Identify false positives and negatives, then tune thresholds.
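Threshold tuning can be done with a simple sweep over a labelled sample. This sketch assumes that, for one category, you have recorded the endpoint's score for each test input alongside a human label; the cost weights (a missed harmful item counting five times as much as a false alarm) are assumptions that your policy owners should set.

```python
def confusion_at(threshold, scored_samples):
    """Count (false positives, false negatives) if scores >= threshold were flagged.

    `scored_samples` is a list of (score, is_harmful) pairs for one category.
    """
    fp = sum(1 for score, harmful in scored_samples if score >= threshold and not harmful)
    fn = sum(1 for score, harmful in scored_samples if score < threshold and harmful)
    return fp, fn


def sweep(thresholds, scored_samples):
    """Tabulate false positives/negatives for each candidate threshold."""
    return {t: confusion_at(t, scored_samples) for t in thresholds}


def best_threshold(thresholds, scored_samples, fn_cost=5.0, fp_cost=1.0):
    """Pick the threshold with the lowest weighted error cost (weights are assumptions)."""
    def cost(t):
        fp, fn = confusion_at(t, scored_samples)
        return fp * fp_cost + fn * fn_cost
    return min(thresholds, key=cost)
```

With only a handful of samples the result is noisy; the sweep table is most useful once the labelled set reflects your real traffic.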

4. Design System Architecture

- Pre-generation moderation: check the user prompt or incoming content and decide whether to allow generation.

- Post-generation moderation: check the model's output before surfacing it to users, with the capacity to suppress or reroute.

- Human-in-the-loop workflows: for high-impact outputs, route flagged content for human review or manual override.

- Logging & audit trails: record prompt, output, moderation score, decision (allow/block/review), timestamp, user ID (if applicable), and model version.
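These four elements fit into a single request handler. The sketch below assumes hypothetical callables `generate(prompt) -> str` for your model and `moderate(text) -> float` returning a single moderation score (for example the highest category score); the 0.5 threshold is a placeholder. It writes one JSON audit record per request with the logging fields listed above.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Callable, Optional


@dataclass
class AuditRecord:
    """One log entry per request."""
    prompt: str
    output: Optional[str]
    input_score: float
    output_score: Optional[float]
    decision: str  # "allow", "block" or "review"
    timestamp: float
    user_id: Optional[str]
    model_version: str


def handle_request(prompt: str,
                   generate: Callable[[str], str],
                   moderate: Callable[[str], float],
                   threshold: float = 0.5,
                   high_impact: bool = False,
                   user_id: Optional[str] = None,
                   model_version: str = "unknown",
                   log: Callable[[str], None] = print) -> Optional[str]:
    """Pre-moderate, generate, post-moderate and log; return text to show or None."""
    input_score = moderate(prompt)
    output, output_score = None, None
    if input_score >= threshold:
        decision = "block"  # pre-generation: the model is never called
    else:
        output = generate(prompt)
        output_score = moderate(output)  # post-generation: check before surfacing
        if output_score < threshold:
            decision = "allow"
        else:
            # High-impact flows send flagged output to a human instead of dropping it.
            decision = "review" if high_impact else "block"
    record = AuditRecord(prompt, output, input_score, output_score, decision,
                         time.time(), user_id, model_version)
    log(json.dumps(asdict(record)))
    return output if decision == "allow" else None
```

In production, `log` would write to your audit store and a "review" decision would also enqueue the item for a moderator.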

5. Performance & Scale Testing

Benchmark latency, throughput, and cost. Ensure moderation calls don’t become bottlenecks in production. Design caching, batching or asynchronous fallbacks where necessary.
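Caching and batching can be combined in a thin wrapper. This sketch assumes a hypothetical `moderate_batch(list_of_texts) -> list_of_results` function that sends several texts in one request (the endpoint accepts a list as `input`); `batch_size` is an assumed value to tune against your benchmarks. If you pin a moderation model version, it is probably wise to include that version in the cache key so an update invalidates old results.

```python
import hashlib


class CachedBatchModerator:
    """Cache moderation results by content hash and send cache misses in batches."""

    def __init__(self, moderate_batch, batch_size=32):
        self.moderate_batch = moderate_batch  # hypothetical wrapper around the endpoint
        self.batch_size = batch_size
        self.cache = {}
        self.calls = 0  # upstream requests made, useful when benchmarking

    @staticmethod
    def _key(text):
        return hashlib.sha256(text.encode("utf-8")).hexdigest()

    def moderate(self, texts):
        # Collect uncached texts once each, preserving their first-seen order.
        misses = list(dict.fromkeys(t for t in texts if self._key(t) not in self.cache))
        for start in range(0, len(misses), self.batch_size):
            chunk = misses[start:start + self.batch_size]
            self.calls += 1
            for text, result in zip(chunk, self.moderate_batch(chunk)):
                self.cache[self._key(text)] = result
        return [self.cache[self._key(t)] for t in texts]
```

Repeated content (templated messages, common greetings) is then classified once, which cuts both latency and request volume.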

6. Monitor and Refine

After deployment, monitor flagged rates, user complaints, false positive/negative trends, model drift, and adverse incidents. Use these signals to refine thresholds, update workflows, and improve training data or prompts.
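Flag-rate monitoring can start very simply. This sketch assumes you store the `categories` booleans from each moderation result, and it compares per-category flag rates between a baseline period and the current one; the 50% relative tolerance is an assumption. A jump may mean a real change in user behaviour or a change in the classifier, and either case warrants investigation.

```python
def flag_rates(records):
    """Share of records flagged per category; each record maps category -> bool."""
    if not records:
        return {}
    names = {name for categories in records for name in categories}
    return {name: sum(1 for c in records if c.get(name)) / len(records) for name in names}


def drift_alerts(baseline, current, tolerance=0.5):
    """Categories whose flag rate moved by more than `tolerance` (relative) from baseline."""
    alerts = {}
    for name, rate in current.items():
        base = baseline.get(name, 0.0)
        if base == 0.0:
            if rate > 0.0:  # a category that was never flagged before now is
                alerts[name] = (base, rate)
        elif abs(rate - base) / base > tolerance:
            alerts[name] = (base, rate)
    return alerts
```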

7. Governance & Compliance

Include legal, compliance, ethics, and product leadership in oversight. Maintain documentation of moderation policy, performance reports, incident logs and remediation efforts. Ensure alignment with data protection (e.g. GDPR), consumer protection, and platform liability frameworks.
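One concrete data-protection measure for moderation audit logs is to pseudonymise user IDs before they are stored. This is a minimal sketch, assuming a secret key held outside the logging system (for example in a secrets manager); `pseudonymise` is a hypothetical helper. Pseudonymised data generally still counts as personal data under GDPR, so this reduces exposure rather than removing obligations.

```python
import hashlib
import hmac


def pseudonymise(user_id, secret_key):
    """Replace a raw user ID with a keyed SHA-256 hash for use in moderation logs.

    The same user always maps to the same token, so reviewers can still spot repeat
    offenders, but the raw ID is not readable from the log itself.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
```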

How Accelerai Supports Enterprises

At Accelerai, we partner with large organisations to embed safe, reliable AI systems. In the context of moderation, here’s how we help:

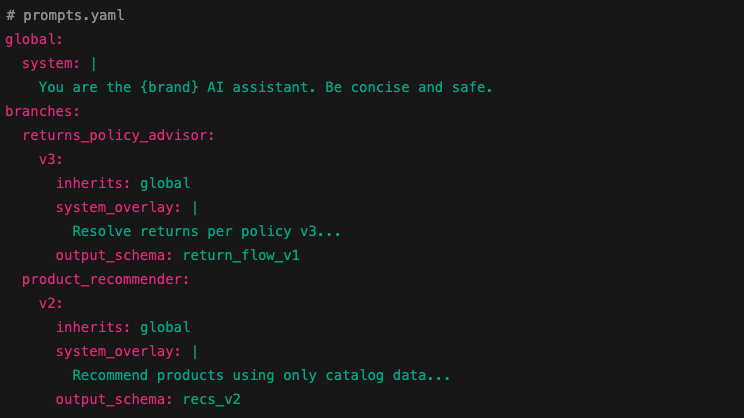

Policy-to-technical translation: We help you translate your corporate, regulatory or industry-specific content policy into technical implementation workflows using the moderation endpoint (and supplementary logic).

Architecture design & integration: We build the end-to-end architecture linking prompt flows, model generation, moderation checks, human-in-the-loop, logging and audit mechanisms.

Performance & security benchmarking: We test latency, throughput, reliability, multi-region and multi-language coverage to ensure the moderation solution meets production-grade enterprise standards.

Governance frameworks: We help establish oversight processes, dashboards, KPIs (such as flagged rate, review latency, user complaint incidence), incident response plans and audit trails.

Training & operational readiness: We provide training to product teams, content moderators and stakeholders on how to interpret moderation output, calibrate thresholds, manage escalation and handle incidents.

The launch of OpenAI’s upgraded Moderation endpoint in August 2022 is a meaningful advance for enterprises deploying AI-driven content or user interaction systems. It provides a tested, scalable, and cost-effective tool to embed content safety, reduce risks, and accelerate deployment.

However, the tool is just one part of a broader safety ecosystem – careful design, continual monitoring, governance and human oversight remain essential. For large organisations building AI products, combining the right moderation tooling with robust architecture and process will distinguish solutions that are trustworthy, scalable and compliant.

If your organisation is planning to deploy or scale AI systems with user-facing or content-generation components, now is the time to build moderation into your roadmap, not as an afterthought but as a core component of your architecture and risk management strategy. At AccelerAI, we are ready to support you on that journey.