As organisations increasingly embed advanced AI capabilities into products and operations, the question of how to deploy safely becomes just as important as what we build. OpenAI has made clear that safety and alignment aren’t optional extras – they are intrinsic to how meaningful and responsible AI systems must be designed, developed and operated.

For enterprises looking to deploy AI at scale and integrate these capabilities into solutions, this is a wake-up call: you can no longer treat AI as simply a feature – it must be treated as a systemic component of your architecture, risk model and organisational governance.

What OpenAI’s Approach Means in Practice

OpenAI emphasises a simple but fundamental point: powerful AI systems should be subject to rigorous safety evaluations. They describe how, prior to the release of their latest model, they spent significant time working across the organisation to make the model safer and more aligned. Importantly, they highlight that lab-based testing has limits, and the real learning comes from real-world use.

From an enterprise viewpoint, this means that your deployment strategy must include three overlapping layers:

Pre-deployment assurance – This is the stage where models are evaluated: alignment testing, adversarial probing, policy checks, and scenario modelling. Human feedback loops, red-teaming, external expert review and robust monitoring are all part of this phase.

Real-world monitoring and feedback loops – Because you can’t anticipate every use case in the lab, there must be continuous monitoring once a system is deployed. Enterprises should therefore plan for logging, anomaly detection, feedback systems and human oversight baked into the live system.

Governance and regulatory engagement – Safety isn’t only a technical problem; it’s organisational and systemic. Regulation will be required, and enterprises must have governance, auditability, versioning, vendor scrutiny and compliance protocols in place.
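To make the second layer more concrete, here is a minimal sketch of a monitored model call. It is an illustration under stated assumptions, not a reference implementation: `monitored_call`, `ReviewQueue`, `looks_risky` and the keyword list are hypothetical names invented for this example, and a real deployment would replace the keyword check with a proper classifier or moderation service.

```python
import logging
import time
from dataclasses import dataclass, field
from typing import Callable, List

logger = logging.getLogger("ai_monitoring")

# Hypothetical policy terms; a real system would use a classifier, not keywords.
RISKY_TERMS = ("password", "ssn")


@dataclass
class ReviewQueue:
    """In-memory stand-in for a human-review queue (assumption)."""
    items: List[dict] = field(default_factory=list)

    def submit(self, record: dict) -> None:
        self.items.append(record)


def looks_risky(text: str) -> bool:
    """Toy anomaly check: flag outputs containing any risky term."""
    lowered = text.lower()
    return any(term in lowered for term in RISKY_TERMS)


def monitored_call(model: Callable[[str], str], prompt: str, queue: ReviewQueue) -> str:
    """Call the model, log the exchange, and route flagged outputs to review."""
    started = time.monotonic()
    output = model(prompt)
    latency = time.monotonic() - started
    flagged = looks_risky(output)
    record = {"prompt": prompt, "output": output, "latency_s": latency, "flagged": flagged}
    logger.info("model_call %s", record)
    if flagged:
        queue.submit(record)  # a human looks at this before any follow-up action
    return output
```

The point of the design is that logging and escalation sit in the call path itself, so every request is observable by default rather than by opt-in.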

Why These Principles Matter for Enterprise Products and Solutions

For large companies designing AI-enabled products or embedding AI into internal systems, the implications are profound.

Architectural decision-making now touches safety, reliability and future-proofing. If you’re building pipelines that depend on a model, you must assess how the provider handles safety, monitoring, version upgrades and fallback paths.

Risk management and reputation are also critical. An AI-driven product that fails safety or alignment checks – through bias, harmful outputs or misuse – can have severe consequences: regulatory fines, brand damage, litigation and operational downtime. Planning for safety up front becomes a competitive advantage.

Cost and scale implications can’t be ignored. Safety systems aren’t free. Monitoring, logging, human reviewers, fallback logic, and retraining all add cost. Enterprises must model not only the compute and storage cost of the AI model but also the full operational safety infrastructure.
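A back-of-the-envelope model can help make this visible. The sketch below is only an assumption about how such costs might be broken down; the class name `SafetyCostModel`, its fields and every number in the example are illustrative inputs, not benchmarks or vendor prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyCostModel:
    """Monthly cost estimate; every figure is an illustrative input."""
    requests_per_month: int
    model_cost_per_request: float    # compute/API spend per call
    logging_cost_per_request: float  # storage and monitoring spend per call
    review_rate: float               # fraction of requests sent to human review
    review_cost_per_item: float      # reviewer cost per reviewed item
    fixed_governance_cost: float     # audits, tooling, committee time per month

    def model_cost(self) -> float:
        return self.requests_per_month * self.model_cost_per_request

    def safety_cost(self) -> float:
        per_request = (
            self.logging_cost_per_request
            + self.review_rate * self.review_cost_per_item
        )
        return self.requests_per_month * per_request + self.fixed_governance_cost

    def total_cost(self) -> float:
        return self.model_cost() + self.safety_cost()


# Hypothetical inputs chosen only to show the shape of the calculation.
estimate = SafetyCostModel(
    requests_per_month=100_000,
    model_cost_per_request=0.01,
    logging_cost_per_request=0.001,
    review_rate=0.02,
    review_cost_per_item=2.0,
    fixed_governance_cost=5_000.0,
)
print(f"model: {estimate.model_cost():.2f}, safety: {estimate.safety_cost():.2f}")
```

With these made-up inputs the safety layer comes to about 9,100 per month against about 1,000 for the model itself, which is the kind of ratio worth checking before a budget is signed off.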

Governance, audit and compliance will be expected as AI matures. Observable metrics, versioning of models, audit trails, and documentation of risk assessments will become standard requirements. Safety must be built into the system at all levels.

Finally, vendor and ecosystem strategy takes on a new dimension. Selecting a model provider is no longer only about capability or speed; it’s about safety support, transparency, alignment with enterprise governance, and long-term roadmaps.

Risks and Challenges That Remain

Even with a strong strategy, enterprises must recognise core risks and trade-offs.

Incomplete coverage of edge cases – No amount of testing can anticipate every misuse or novel failure mode, so enterprises must build systems that can detect and respond to failures they did not foresee.

Vendor opacity and lock-in risk – Relying heavily on one model provider may limit flexibility and increase exposure to unknown shifts in behaviour or cost. Portability and exit options should be planned.

Escalating complexity and cost – As models become more capable, the cost and effort of monitoring, oversight and safe deployment grow significantly.

Regulatory uncertainty – Governments are moving toward more active oversight of AI, so organisations must be ready to adapt as regulatory regimes change.

Change management and version risk – Model upgrades may alter behaviours, performance or risk profiles; enterprises must plan for regression testing and fallback mechanisms.
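The last risk, version change, lends itself to a simple gate. As a rough sketch, assuming a model can be treated as a function from prompt to text and that a team maintains a small "golden" set of prompts with acceptance checks, an upgrade could be promoted only when it passes that set. The names `regression_failures` and `choose_model` are hypothetical.

```python
from typing import Callable, Dict, List

Model = Callable[[str], str]
GoldenSet = Dict[str, Callable[[str], bool]]  # prompt -> acceptance check


def regression_failures(candidate: Model, golden: GoldenSet) -> List[str]:
    """Return the prompts whose candidate output fails its acceptance check."""
    return [prompt for prompt, check in golden.items() if not check(candidate(prompt))]


def choose_model(
    pinned: Model, candidate: Model, golden: GoldenSet, max_failures: int = 0
) -> Model:
    """Promote the candidate only if it passes the golden set; otherwise keep the pinned version."""
    failures = regression_failures(candidate, golden)
    return candidate if len(failures) <= max_failures else pinned
```

Keeping the pinned version as the default return value means a failed upgrade degrades to the known behaviour rather than to an outage.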

Practical Steps for Enterprises

Here are actionable recommendations for product leads, solution architects and enterprise AI teams:

Inventory your AI-enabled systems – Know which products or workflows depend on models, what data they use, and where safety considerations must apply.

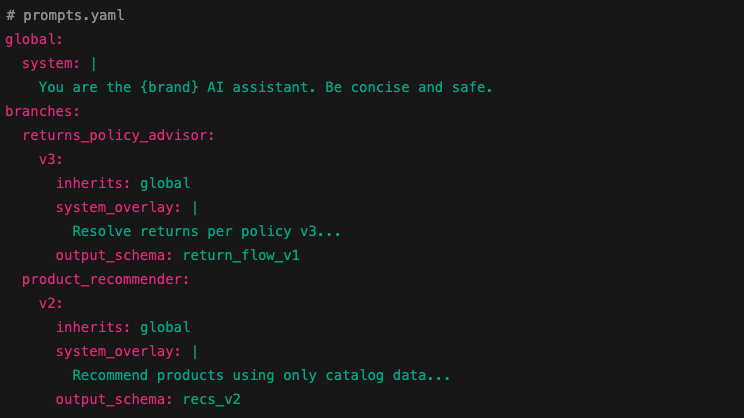

Build modular, version-aware architecture – Treat model endpoints as interchangeable modules, maintaining separation between model, prompts, governance logic, data pipelines and fallback flows.

Design for observability – Integrate logging, monitoring, alerting, and escalation from the start. Define metrics such as error rate, flagged items, user complaints and review latency.

Negotiate vendor terms – Ask model providers for safety documentation: how they test, monitor, and govern releases, and how they respond to incidents. Align contracts accordingly.

Define governance workflows – Create an AI safety oversight committee with representation from product, legal, compliance, security and engineering. Define review cadences, incident playbooks and audit processes.

Pilot with conservative use cases – Start with lower-risk workflows before deploying to high-impact or regulated domains. Use these pilots to stress-test governance and monitoring.

Plan for costs and scale – Estimate not only model usage costs, but also monitoring, human-review frameworks, fallback logic and governance overhead.
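Two of the steps above, modular version-aware architecture and design for observability, can be sketched together. This is one possible shape under stated assumptions, not a prescribed design: `ModelEndpoint`, `EchoModel` and `ModelRouter` are hypothetical names, and `EchoModel` merely stands in for a real provider client.

```python
from collections import Counter
from typing import Protocol


class ModelEndpoint(Protocol):
    """Anything with a version label and a generate method can be plugged in."""
    version: str

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for a real provider client (hypothetical)."""

    def __init__(self, version: str, fail: bool = False) -> None:
        self.version = version
        self.fail = fail

    def generate(self, prompt: str) -> str:
        if self.fail:
            raise RuntimeError("endpoint unavailable")
        return f"[{self.version}] {prompt}"


class ModelRouter:
    """Routes calls to a primary endpoint, falls back on error, and counts outcomes."""

    def __init__(self, primary: ModelEndpoint, fallback: ModelEndpoint) -> None:
        self.primary = primary
        self.fallback = fallback
        self.metrics: Counter = Counter()

    def generate(self, prompt: str) -> str:
        self.metrics["requests"] += 1
        try:
            return self.primary.generate(prompt)
        except Exception:
            # Record the failure so it shows up in dashboards and alerts.
            self.metrics["primary_errors"] += 1
            self.metrics["fallback_used"] += 1
            return self.fallback.generate(prompt)

    def error_rate(self) -> float:
        requests = self.metrics["requests"]
        return self.metrics["primary_errors"] / requests if requests else 0.0
```

Because the router depends only on the `ModelEndpoint` shape, swapping providers or pinning an older version is a configuration change rather than a rewrite, and the counters give a starting point for the error-rate metric mentioned above.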

Learn AI Safety with Accelerai

AI safety may sound abstract, but its implications are deeply practical for enterprises building AI products and solutions. It underscores the reality that as AI becomes more capable and central to workflows, safety, alignment and governance must move from “nice to have” to “must have”.

Enterprises that treat safety as an operational discipline – embedding oversight, monitoring, governance and modular architecture – will be better positioned to deploy AI confidently and at scale. Organisations that don’t may find themselves exposed when the next generation of capabilities arrives.

At Accelerai, we partner with large organisations to chart that path: from inventorying AI assets and designing safe architectures to negotiating with vendors, establishing governance workflows, and monitoring operations.

If you’re looking to embed advanced AI safely into your product roadmap or enterprise solution stack, now is the time to build with safety in mind – not as an add-on, but as a core pillar.