In May 2023, Anthropic announced a significant upgrade to its Claude model: the ability to work with 100,000-token context windows. That is equivalent to roughly 75,000 words or several hundred pages of text.

This leap in “working memory” for a language model opens meaningful opportunities and also raises important considerations for large organisations building AI-enabled products and solutions.

If your organisation is focused on product development, on integrating AI into workflows or on solving complex business problems, this advancement is a reason to rethink how you design, deliver and govern model-powered systems.

Rather than being limited to a few pages of text or short prompts, your systems can now ingest entire documents, long reports, substantial portions of a codebase or multi-day conversation logs in a single request. That brings new capability, along with new operational, technical and risk requirements.

In this post we explore what the upgrade entails, how it affects enterprise use cases, what to watch out for, and how your organisation can position itself to take advantage.

What the 100K Context Window Upgrade Actually Means

Before this upgrade, most large language model (LLM) applications worked with context windows of a few thousand to a few tens of thousands of tokens (Claude itself previously supported about 9,000). That meant the model could only “see” a limited slice of text at once.

With this announcement, Claude supports context windows of up to 100,000 tokens (roughly 75,000 words). That is enough for hundreds of pages of reports, transcripts, code or design documents to be provided in a single call.
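The token-to-word figures above are rules of thumb, not fixed conversions. The minimal Python sketch below makes the arithmetic explicit. It assumes about 0.75 English words per token, which is the ratio implied by the 75,000-word figure. It also assumes a hypothetical 300 words per page. Real ratios vary with language, formatting and tokenizer.

```python
# Rule-of-thumb conversions only: real counts depend on the tokenizer and the text.
WORDS_PER_TOKEN = 0.75  # ratio implied by "100,000 tokens is roughly 75,000 words"
WORDS_PER_PAGE = 300    # assumption: one dense page of prose


def tokens_to_words(tokens: int) -> int:
    """Estimate how many English words a token budget corresponds to."""
    return round(tokens * WORDS_PER_TOKEN)


def tokens_to_pages(tokens: int, words_per_page: int = WORDS_PER_PAGE) -> int:
    """Estimate how many pages of prose a token budget corresponds to."""
    return round(tokens_to_words(tokens) / words_per_page)


if __name__ == "__main__":
    for window in (8_000, 100_000):
        print(f"{window:,} tokens ~ {tokens_to_words(window):,} words "
              f"~ {tokens_to_pages(window)} pages")
```

Under these assumptions, a 100,000-token window works out to about 250 pages. That fits the "hundreds of pages" description.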

This expanded context enables new ways of working. You can submit large documents and have Claude reason over them without chunking them manually, provided they fit within the window. Examples include annual reports, M&A due-diligence packs, legal contracts, sizeable code modules, long customer service logs and multi-turn dialogues.

Example applications include:
- summarising dense documents;
- extracting themes across many pages;
- deep Q&A over large content sets;
- extended conversations that keep their context across hours or even days, as long as the accumulated transcript stays within the window.

Because the model handles this scale directly, there is less need for complex upstream workflows that chunk, stitch and summarise content before the prompt reaches the model. For material that fits in the window, the path from source document to answer becomes much more direct.
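Whether a document can skip chunking comes down to a budget: the instructions, the document and room for the answer all have to fit in the window. The sketch below makes three assumptions:
- about four characters per token for English text (a crude heuristic, not Claude's tokenizer);
- a conservative, hypothetical reserve of 4,000 tokens for the response;
- in production, you would count tokens with your provider's own tooling instead.

```python
CONTEXT_WINDOW_TOKENS = 100_000  # the window size from the May 2023 announcement
CHARS_PER_TOKEN = 4              # rough heuristic for English prose, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_single_call(document: str, instructions: str,
                        reserved_output_tokens: int = 4_000,
                        window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """True if instructions, document and reserved answer space fit in one window."""
    needed = (estimate_tokens(instructions)
              + estimate_tokens(document)
              + reserved_output_tokens)
    return needed <= window
```

Two examples under this heuristic:
- A 300,000-character report plus a one-line instruction comes to roughly 79,000 tokens, so it fits.
- A 400,000-character report exceeds the window and would need the chunking this upgrade otherwise avoids.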

For enterprises, that means fewer engineering “glue” layers, faster time to prototype, and more natural user experiences where a user might say, “Here’s our entire report; what are the key risks?” rather than breaking it into smaller pieces.

Enterprise Use Case Implications

Document-Heavy Environments

Industries such as legal services, financial institutions, consulting and insurance routinely deal with very large documents. Examples include contracts, regulatory filings such as SEC Form 10-K and 10-Q reports, research papers and due-diligence packages. With a 100K-token context window, Claude can often ingest an entire filing in one pass. It can then extract key issues, summarise risks and support decision-making, and it can compare across years when several filings fit in the window together. That reduces manual effort and supports faster, more scalable workflows.

Codebase Analysis and Technical Documentation

For organisations building developer tools or internal engineering assistants, feeding hundreds of pages of documentation or whole code modules into the model allows deeper reasoning and richer context. It also means fewer errors caused by context being cut off. This helps with code review, automated comment generation, drafting designs and documentation, and internal developer productivity platforms.

Extended Conversational Assistants

In customer service, enterprise help desks and knowledge management, interactions can span many turns, many documents and many hours. A model that can “remember” and reason over a large context lets an assistant stay coherent, refer back to earlier content and give richer answers. For example, a support bot could be given a full service contract, historical tickets and product documentation in one context. It could then work through a complex, multi-step request without losing track of earlier details.

Strategic Analysis and Insight Generation

Product, strategy and marketing teams often need to scan large volumes of material such as competitor reports, market research or internal performance logs. With a 100K-token window, you can give Claude a substantial set of relevant documents at once, up to the window limit. You can then ask for high-level themes, comparative analysis and strategic insight without heavy manual preparation. For enterprises, this means faster insight cycles and quicker prototypes.

What Enterprises Need to Consider and Plan For

While the feature is exciting, enterprises need to plan carefully to integrate and govern these capabilities effectively. A few key considerations:

Architecture and Data Pipeline Readiness

Feeding large documents into a model requires efficient ingestion, cleaning, metadata tagging, secure storage and pipeline orchestration. You still need to handle data access, privacy, versioning and audit logs. The expanded context window does not remove those responsibilities. Because far more data flows into each request, it makes them more important.
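To make this concrete, the sketch below shows one way to attach provenance and versioning metadata at ingestion time. The following are all illustrative assumptions:
- the class and field names;
- the classification labels;
- the in-memory store.

A real deployment would persist records in a database or document management system and apply far more thorough cleaning.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DocumentRecord:
    """Hypothetical metadata kept alongside each document sent to a model."""
    doc_id: str
    version: int
    source: str          # where the file came from (system or uploader)
    classification: str  # e.g. "public", "internal", "confidential"
    sha256: str          # fingerprint of the cleaned text, for audit and change detection
    ingested_at: str


def normalise(text: str) -> str:
    """Minimal cleaning: unify line endings and strip trailing whitespace."""
    lines = text.replace("\r\n", "\n").replace("\r", "\n").split("\n")
    return "\n".join(line.rstrip() for line in lines).strip()


class DocumentRegistry:
    """In-memory stand-in for a versioned document store."""

    def __init__(self) -> None:
        self._history: dict[str, list[DocumentRecord]] = {}

    def ingest(self, doc_id: str, raw_text: str, source: str,
               classification: str) -> DocumentRecord:
        text = normalise(raw_text)
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        versions = self._history.setdefault(doc_id, [])
        if versions and versions[-1].sha256 == digest:
            return versions[-1]  # content unchanged: no new version
        record = DocumentRecord(
            doc_id=doc_id,
            version=len(versions) + 1,
            source=source,
            classification=classification,
            sha256=digest,
            ingested_at=datetime.now(timezone.utc).isoformat(),
        )
        versions.append(record)
        return record
```

The design hashes the normalised text rather than the raw upload. As a result, whitespace-only changes do not create new versions, while any substantive edit does. This gives audit logs a stable fingerprint to reference.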

Performance, Cost and Scaling

Larger inputs mean more compute, higher latency and higher cost, since providers typically bill per token. Benchmark three things:
- how long large-context queries take;
- what a 100K-token input costs per call;
- what throughput you will need at scale.

Budget not just for model calls but also for infrastructure, caching, batching, fallback logic and engineering overhead.
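Pricing is per token, so at the same rate the input portion of a 100K-token prompt costs a hundred times that of a 1K-token prompt. The sketch below makes that arithmetic explicit. The prices in the usage example are placeholders, not Anthropic's actual rates, so substitute your provider's current price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices. Values used below are placeholders, not real rates."""
    input_per_million: float
    output_per_million: float


def cost_per_call(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Token cost of one request; input and output are usually priced separately."""
    return (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000


def monthly_token_cost(calls_per_day: int, input_tokens: int, output_tokens: int,
                       pricing: Pricing, days: int = 30) -> float:
    """Token spend only; infrastructure, caching and engineering are extra."""
    return calls_per_day * days * cost_per_call(input_tokens, output_tokens, pricing)


if __name__ == "__main__":
    placeholder = Pricing(input_per_million=10.0, output_per_million=30.0)  # hypothetical
    print(f"per call:  ${cost_per_call(100_000, 1_000, placeholder):.2f}")
    print(f"per month: ${monthly_token_cost(200, 100_000, 1_000, placeholder):,.2f}")
```

Assume placeholder prices of $10 per million input tokens and $30 per million output tokens:
- One call with 100,000 input tokens and 1,000 output tokens costs about $1.03.
- 200 such calls a day come to roughly $6,180 over 30 days, before any non-model costs.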

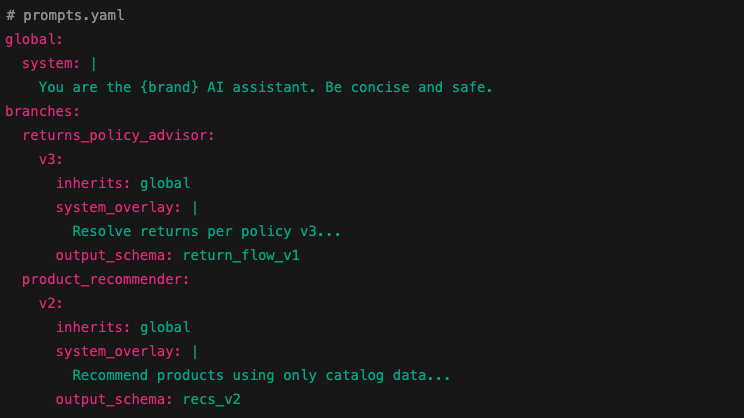

Prompt Engineering and Context Management

With greater context comes greater complexity, and a large context is only useful if the model focuses on the right parts of it. That calls for three things:
- prompts that anchor the model to the relevant sections;
- a clear separation between system instructions and user-uploaded content, with metadata marking which is which;
- care that irrelevant material does not degrade answer quality.

You will also need to handle document versions and updates so that the context stays current over time.
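One common way to apply this is to wrap each document in XML-style tags that carry its metadata, and to put the question after the documents. Anthropic's prompting guidance for long documents also suggests this approach. The sketch below builds such a prompt as a plain dictionary. The following are illustrative assumptions rather than any SDK's required format:
- the tag names;
- the `system`/`messages` layout;
- the citation instruction.

```python
from dataclasses import dataclass
from html import escape


@dataclass(frozen=True)
class SourceDocument:
    title: str
    version: int
    origin: str  # hypothetical labels, e.g. "user_upload" or "internal_repository"
    text: str


def build_prompt(system_rules: str, documents: list[SourceDocument], question: str) -> dict:
    """Keep trusted instructions separate from uploaded content; question goes last."""
    blocks = []
    for i, doc in enumerate(documents, start=1):
        # Metadata goes in escaped attributes; document text is inserted unchanged.
        blocks.append(
            f'<document index="{i}" title="{escape(doc.title)}" '
            f'version="{doc.version}" origin="{escape(doc.origin)}">\n'
            f"{doc.text}\n</document>"
        )
    user_content = (
        "<documents>\n" + "\n".join(blocks) + "\n</documents>\n\n"
        "Answer using only the documents above and cite the document index for each claim.\n"
        f"Question: {question}"
    )
    return {"system": system_rules,
            "messages": [{"role": "user", "content": user_content}]}
```

The document text is inserted unescaped. In practice, you may also want to guard against uploaded text that imitates the closing tag.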

Governance, Auditability and Risk

Large-context capability raises the stakes. Suppose you feed a large body of company documents into the model and act on its recommendation. The impact of any error or misinterpretation grows accordingly. Governance workflows must include:
- review and human-in-the-loop checks;
- logging of model inputs and outputs;
- version control of documents;
- audit trails.

Ensure compliance with internal policy, data protection law and industry regulation.
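As an illustration of the logging piece, the sketch below emits one JSON Lines record per model call. It stores a SHA-256 fingerprint of the prompt plus the document versions used. This assumes the full prompt text is retained separately under your data-retention policy. The field names and the `reviewed_by` convention are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def audit_entry(user: str, model: str, prompt: str,
                document_versions: dict[str, int], output: str,
                reviewed_by: Optional[str] = None) -> str:
    """Build one JSON Lines audit record for a large-context model call."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "model": model,
        # Fingerprint instead of full text; assumes the prompt is archived elsewhere.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "document_versions": document_versions,  # e.g. {"contract-001": 3}
        "output": output,
        "reviewed_by": reviewed_by,  # stays None until a human signs off
    }
    return json.dumps(entry, sort_keys=True)


def append_to_log(path: str, record: str) -> None:
    """Append one record as a line of a JSON Lines file."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(record + "\n")
```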

Vendor and Model Provider Strategy

Not all model providers support such large context windows. For each provider, check:
- whether it offers the feature;
- what limitations apply;
- what service-level agreement (SLA) is offered;
- what model version changes are planned.

Also consider portability: if you build around Claude’s 100K context window, how easily could you migrate or switch providers later?
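One way to keep switching costs low is to make application code depend on a small, provider-neutral interface and to confine each vendor's SDK to an adapter. The sketch below uses Python's `typing.Protocol`. The interface, the `EchoProvider` test stand-in and the selection rule are all hypothetical. A real adapter would wrap the vendor's official client library.

```python
from typing import Protocol


class CompletionProvider(Protocol):
    """The only surface application code depends on (hypothetical interface)."""
    name: str
    max_context_tokens: int

    def complete(self, system: str, user: str, max_output_tokens: int) -> str: ...


class EchoProvider:
    """Hypothetical stand-in for tests; a real adapter would call a vendor SDK here."""

    def __init__(self, name: str, max_context_tokens: int) -> None:
        self.name = name
        self.max_context_tokens = max_context_tokens

    def complete(self, system: str, user: str, max_output_tokens: int) -> str:
        return f"[{self.name}] received {len(user)} characters"


def pick_provider(providers: list[CompletionProvider],
                  needed_tokens: int) -> CompletionProvider:
    """Return the first configured provider whose window can hold the request."""
    for provider in providers:
        if provider.max_context_tokens >= needed_tokens:
            return provider
    raise ValueError(f"No provider supports {needed_tokens:,} tokens; chunk the input instead")
```

Record each provider's context limit in configuration. That way, you can fall back to chunking when no configured model can accept the whole input.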

Strategic Recommendations for Enterprise Deployment

Pilot with a high-value, document-intensive workflow

Choose a use case where large context input unlocks value, such as ingestion of annual reports, contracts, or research. Evaluate response quality, latency, cost, engineering effort and user experience.

Build your data ingestion and pipeline infrastructure

Set up secure upload, metadata management, document version control, cleaning and preprocessing. Ensure that you track provenance, retention, confidentiality and compliance.

Design prompt templates and context management strategies

Develop reusable prompt patterns for large-context tasks such as summarising, extracting risks and generating internal briefs. Establish guidelines for structuring context, managing document sizes, chunking when documents exceed the window, and handling updates.
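Where a document set exceeds even a 100K-token window, chunking returns. The minimal sketch below assumes paragraphs are separated by blank lines. It uses a character budget as a stand-in for tokens; divide by roughly four for an English token estimate. Whole paragraphs are packed into chunks. The last paragraph of each chunk is repeated at the start of the next, so content that spans a boundary is not lost.

```python
def chunk_paragraphs(text: str, max_chars: int, overlap_paragraphs: int = 1) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_chars characters.

    The last `overlap_paragraphs` paragraphs of each chunk are repeated at the start
    of the next one when they fit, so context spanning a boundary is not lost.
    A single paragraph longer than max_chars becomes its own oversized chunk.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    for para in paragraphs:
        if current and len("\n\n".join(current + [para])) > max_chars:
            chunks.append("\n\n".join(current))
            carry = current[-overlap_paragraphs:] if overlap_paragraphs > 0 else []
            # Drop the overlap if carrying it would push the next chunk over budget.
            current = carry if len("\n\n".join(carry + [para])) <= max_chars else []
        current.append(para)
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

The function keeps paragraphs intact rather than cutting at a fixed character offset. A paragraph that is itself too large is passed through whole. Splitting such a paragraph by sentence is left to your own guidelines.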

Establish monitoring, review and audit workflows

Log all large-context queries, inputs, outputs and user interactions. Build dashboards to monitor latency, token usage, content flags, user corrections and error rates. Route high-risk outputs for human review.
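The routing rule can start simple. The sketch below flags an output for human review in three cases:
- the use case is on a high-risk list;
- the output matches sensitive patterns;
- the input was so large that spot-checking is hard.

The use-case labels, regular expressions and 50,000-token threshold are hypothetical placeholders to be replaced by your own risk policy.

```python
import re
from dataclasses import dataclass, field

# Hypothetical policy values; replace with your organisation's own risk rules.
HIGH_RISK_USE_CASES = {"legal_advice", "credit_decision", "regulatory_filing"}
FLAG_PATTERNS = [re.compile(p, re.IGNORECASE)
                 for p in (r"\bindemnif", r"\bmaterial adverse\b", r"\bterminat")]


@dataclass
class ReviewDecision:
    needs_human: bool
    reasons: list[str] = field(default_factory=list)


def route_for_review(use_case: str, output: str, input_tokens: int,
                     large_input_threshold: int = 50_000) -> ReviewDecision:
    """Collect every reason an output should go to a human reviewer."""
    reasons = []
    if use_case in HIGH_RISK_USE_CASES:
        reasons.append(f"use case '{use_case}' is high risk")
    for pattern in FLAG_PATTERNS:
        if pattern.search(output):
            reasons.append(f"output matches flag pattern {pattern.pattern!r}")
    if input_tokens >= large_input_threshold:
        reasons.append("very large input; harder to spot-check")
    return ReviewDecision(needs_human=bool(reasons), reasons=reasons)
```

Returning the reasons, not just a yes/no, gives reviewers context. It also feeds the same flags into the monitoring dashboards.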

Cost model the solution holistically

Model token usage including input and output, compute cost, latency, engineering effort, review cost, and data pipeline cost. Compare to expected value, such as time saved, decision acceleration, or risk reduction.
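A holistic comparison can be as simple as summing every monthly cost line and setting the total against the value of time saved. The sketch below models only time saved, because decision acceleration and risk reduction are harder to price. All categories and figures are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonthlyCosts:
    tokens: float          # model usage, input plus output
    infrastructure: float  # hosting, storage, pipeline compute
    engineering: float     # amortised build and maintenance effort
    human_review: float    # reviewer time for routed outputs


@dataclass(frozen=True)
class MonthlyValue:
    hours_saved: float
    loaded_hourly_rate: float

    @property
    def total(self) -> float:
        return self.hours_saved * self.loaded_hourly_rate


def net_monthly_benefit(costs: MonthlyCosts, value: MonthlyValue) -> float:
    """Value of time saved minus every cost line; positive means the pilot pays off."""
    total_cost = (costs.tokens + costs.infrastructure
                  + costs.engineering + costs.human_review)
    return value.total - total_cost
```

For instance, take $18,000 of combined monthly cost against 400 hours saved at a loaded rate of $75 per hour. The net benefit is $12,000 a month.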

Review vendor commitments and roadmap

Confirm the following with your model provider:
- what context window limits apply;
- whether 100K-token windows are generally available or restricted to certain models or plans;
- how upgrades are managed;
- what SLAs are in place;
- how changes to models and limits are communicated.

Summary – 100K Context Windows

The announcement of a 100K token context window for Claude is a milestone for enterprise AI. It transforms what “large context” means and opens up workflows that were previously difficult or too manual.

For product builders and solution architects in large enterprises, this advancement offers real opportunities to accelerate insight, automate document-heavy tasks, support richer conversations and deliver higher-value AI integrations.

However, unleashing this capability successfully depends on thoughtful architecture, governance, pipeline readiness, cost control and vendor strategy. The ability to feed hundreds of pages into a model is just the start. The real challenge is using that capacity wisely and responsibly.

At Accelerai, we help large organisations navigate this frontier. We design pipelines, build prompt frameworks, establish governance, and advise on cost modelling and vendor strategy.

If you are exploring how to deploy large-context AI in your organisation, now is the moment to prepare your architecture and processes. Prepare not only for what the technology can do today but for what it will enable tomorrow.