In April 2023, the U.S. National Telecommunications and Information Administration (NTIA) released a Request for Comment on AI accountability, inviting input on how a governing framework could support trustworthy AI deployment across sectors.

OpenAI submitted its response in June 2023, outlining what it views as the required building blocks for an accountability ecosystem around highly capable AI systems.

For large organisations developing AI-enabled products and solutions, OpenAI’s comment signals several key shifts. Accountability is no longer a peripheral concern; it is central to AI strategy. Model providers want to be part of the conversation.

Enterprises must treat AI governance and vendor strategy as integral to their technical rollout.

In this post, we unpack OpenAI’s commentary, draw out implications for enterprise product teams, highlight the risks and trade-offs, and suggest what organisations should plan for now.

What OpenAI’s Comment Covers

OpenAI begins by welcoming the NTIA’s framing of AI accountability as an “ecosystem” issue, meaning solutions must work across domains and across providers and address numerous types of deployment.

OpenAI emphasises that accountability must include horizontal elements common across all AI systems and vertical elements tailored for specific sectors such as healthcare, finance, and education.

The company stresses that it is, and will remain, engaged in developing and deploying highly capable foundation models: general-purpose systems trained on large datasets and able to perform many downstream tasks.

OpenAI argues these systems require special attention, regardless of the particular domain in which they will be applied.

OpenAI notes that existing laws already apply to AI products and that the regulatory landscape is evolving, for example with new initiatives in the United States and the upcoming European Union AI Act.

Importantly, OpenAI views sector-specific rules for medicine, education, or employment as critical components of the accountability ecosystem since domain expertise is needed for effective governance.

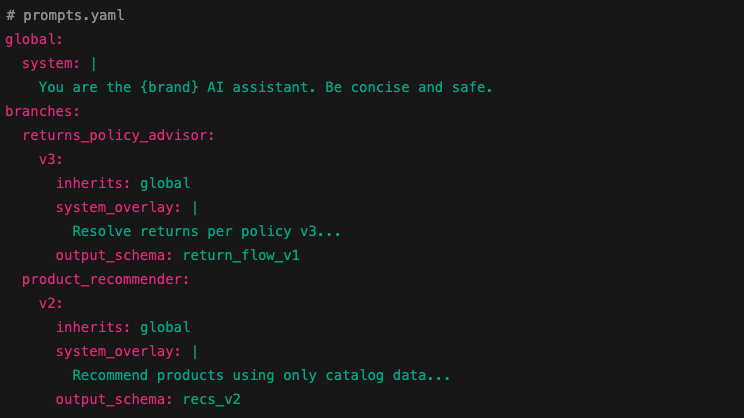

In its comment, OpenAI outlines practices it currently applies, from pre-deployment testing, red teaming, monitoring, and usage policies to post-deployment review, and encourages the development of industry-wide standards and frameworks.

It also signals that new rules should carefully consider highly capable foundation models to preserve innovation while ensuring responsibility.

Why This Matters for Enterprises Developing AI Products

For enterprises building AI-driven products, the commentary has several practical implications. First, it highlights that your vendor’s governance practices matter. If you are integrating a foundation model, you should expect robust vendor documentation of testing, auditing, usage policy, alignment, and monitoring.

Second, architecture and deployment planning must incorporate accountability mechanisms from the outset, not as an afterthought. Enterprises must architect for logging, monitoring, human-in-the-loop review, version control, transparency, and escalation, and must do so across the model lifecycle.
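
To make this concrete, here is a minimal Python sketch of a wrapper that logs every model call against a pinned model version and escalates sensitive responses to a human reviewer. It is an illustration under stated assumptions, not a reference design: `AccountableClient`, `call_model`, the `review_terms` keyword rule and the version string are all hypothetical names, and a real escalation policy would be considerably richer than keyword matching.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass
class Decision:
    prompt: str
    response: str
    model_version: str
    needs_review: bool
    timestamp: str


@dataclass
class AccountableClient:
    # `call_model` stands in for whatever vendor SDK call you use (hypothetical).
    call_model: Callable[[str], str]
    # Pinned model version, recorded with every call for traceability.
    model_version: str
    # Hypothetical escalation rule: terms that route a call to a human reviewer.
    review_terms: tuple = ("diagnosis", "loan", "dosage")
    audit_log: List[Decision] = field(default_factory=list)
    review_queue: List[Decision] = field(default_factory=list)

    def ask(self, prompt: str) -> Decision:
        response = self.call_model(prompt)
        text = (prompt + " " + response).lower()
        needs_review = any(term in text for term in self.review_terms)
        decision = Decision(
            prompt=prompt,
            response=response,
            model_version=self.model_version,
            needs_review=needs_review,
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
        self.audit_log.append(decision)  # every call is logged
        if needs_review:
            self.review_queue.append(decision)  # escalate to a human
        return decision


def fake_model(prompt: str) -> str:
    """Placeholder model so the sketch runs without any vendor SDK."""
    return "Echo: " + prompt


client = AccountableClient(call_model=fake_model, model_version="vendor-model-2023-06")
client.ask("Summarise this meeting")
client.ask("What dosage should I take?")
print(len(client.audit_log), len(client.review_queue))  # 2 1
```

Keeping the logging and escalation logic in one wrapper, rather than scattered across product code, makes it easier to show an auditor exactly which calls were reviewed and under which model version.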

Third, vendor strategy is key. Organisations need to assess whether a model provider is transparent, provides audit logs, supports portability, and aligns with emerging policy frameworks.

OpenAI’s call for sector-specific tailoring means enterprises in regulated industries must evaluate compatibility with those domains, such as healthcare compliance or financial advice regulation.

Fourth, cost and product timelines will be impacted. Building these accountability mechanisms adds engineering, governance, monitoring and legal overhead.

Organisations must budget not just for model usage but for the full accountability infrastructure, such as human review, audit logs, and compliance reporting.
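
As a rough illustration of what that budgeting exercise might look like, the Python sketch below adds log storage, human review and a fixed compliance overhead to raw model usage. Every field name and every number is a hypothetical placeholder chosen only to make the arithmetic visible; substitute your own volumes, rates and vendor pricing.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCostModel:
    requests: int                  # model calls per month
    model_cost_per_request: float  # vendor usage charge per call
    log_bytes_per_request: int     # size of the stored audit record
    storage_cost_per_gb: float     # monthly storage price per GB
    review_rate: float             # fraction of calls sent to human review
    review_minutes: float          # reviewer time per reviewed call
    reviewer_hourly_rate: float    # loaded cost of a reviewer per hour
    fixed_compliance_cost: float   # reporting, legal and governance overhead

    def breakdown(self) -> dict:
        usage = self.requests * self.model_cost_per_request
        storage_gb = self.requests * self.log_bytes_per_request / 1e9
        storage = storage_gb * self.storage_cost_per_gb
        review_hours = self.requests * self.review_rate * self.review_minutes / 60
        review = review_hours * self.reviewer_hourly_rate
        total = usage + storage + review + self.fixed_compliance_cost
        return {
            "model_usage": usage,
            "audit_log_storage": storage,
            "human_review": review,
            "compliance_overhead": self.fixed_compliance_cost,
            "total": total,
        }


# All values below are placeholders, not benchmarks or real prices.
model = MonthlyCostModel(
    requests=1_000_000,
    model_cost_per_request=0.002,
    log_bytes_per_request=4_000,
    storage_cost_per_gb=0.025,
    review_rate=0.01,
    review_minutes=3,
    reviewer_hourly_rate=40.0,
    fixed_compliance_cost=5_000.0,
)
for item, cost in model.breakdown().items():
    print(f"{item}: {cost:,.2f}")
```

The value of a model like this is less the total than the breakdown: it shows which accountability line items scale with request volume and which are fixed.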

Finally, risk management and reputation are elevated. When a large enterprise deploys AI at scale, the expectation from regulators, customers, and the public is higher.

OpenAI’s comment reinforces the idea that advanced models require careful and safety-focused deployment. Enterprises that ignore this may face regulatory or reputational consequences.

Core Considerations and Trade-offs

OpenAI’s commentary also prompts enterprises to consider key trade-offs:

Innovation versus oversight: OpenAI argues for regulation calibrated to model capability but also warns that overly broad rules may stifle innovation.

Enterprises must decide how much vendor flexibility they accept versus how much governance they require.

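Horizontal versus vertical controls: Horizontal standards such as audit logs and usage policies are efficient because they apply to every system, while vertical rules may be more effective within a specific sector because they encode domain expertise. Enterprises must design governance that combines both.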
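
One way to picture the combination is a horizontal baseline policy with sector-specific overrides layered on top. The Python sketch below is an assumption-laden illustration: the policy keys, sector names and values (including the retention periods) are hypothetical and do not reflect any actual regulatory requirement.

```python
from typing import Optional

# Horizontal baseline: controls applied to every AI system (hypothetical values).
HORIZONTAL_BASELINE = {
    "audit_logging": True,
    "log_retention_days": 90,
    "human_review_required": False,
    "usage_policy": "general",
}

# Vertical overlays: sector-specific overrides (hypothetical values).
VERTICAL_OVERLAYS = {
    "healthcare": {
        "human_review_required": True,
        "log_retention_days": 365,
        "usage_policy": "clinical",
    },
    "finance": {
        "human_review_required": True,
        "usage_policy": "financial-advice",
    },
}


def effective_policy(sector: Optional[str]) -> dict:
    """Start from the horizontal baseline, then apply any sector overlay."""
    policy = dict(HORIZONTAL_BASELINE)  # copy so the baseline is never mutated
    policy.update(VERTICAL_OVERLAYS.get(sector, {}))
    return policy


print(effective_policy("healthcare"))
print(effective_policy(None))  # no sector: baseline only
```

Because unknown sectors fall back to the baseline, a new business unit starts with the horizontal controls by default and only gains stricter rules once an overlay is defined for it.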

Vendor lock-in risk: If a vendor offers rich governance features that are hard to move off its platform, you may face lock-in. Enterprises should ask about model upgrade paths, portability, data access and audit history.

Lifecycle responsibility: OpenAI emphasises that accountability spans the full lifecycle of the system, from design to decommissioning. Enterprises must maintain oversight long after initial deployment.

Transparency versus business secrecy: To be accountable, model providers and enterprises may need to share logs, audit results, and usage data. At the same time, business concerns, intellectual property and security may limit sharing.

Enterprises must balance openness with confidentiality.

Actionable Steps for Enterprises

Here are some recommendations for product owners, solution architects and enterprise AI teams:

- Assess your vendor’s accountability readiness. Ask whether the provider publishes system cards, developer logs, testing results, and human-in-the-loop case studies. Determine if they treat foundation models differently.

- Build your AI governance framework now. Set up an AI accountability committee, define roles, audit schedules, review workflows, incident escalation, user complaint pipelines and model version tracking.

- Integrate accountability into product architecture. Design your product stack so that model usage, prompts, responses, metadata, user feedback, and change logs are all captured. Incorporate monitoring, human-in-the-loop overrides, and transparency.

- Tailor governance to your domain. If you operate in finance, healthcare, regulated utilities, manufacturing or the public sector, overlay domain-specific controls such as medical device regulations, financial advice rules, and safety incident workflows on top of horizontal AI governance.

- Budget for accountability infrastructure. When modelling cost, include model usage, audit log storage, review workflows, human moderators or reviewers, regulatory documentation, compliance engineering, and vendor governance overhead.

- Monitor regulatory developments and align vendor strategy. The NTIA RFC is just one step. Watch for updates from governments and international agreements. Ensure your vendor can adjust.

- Pilot accountability-centric deployments. Before full rollout, run a pilot with full logging, review, monitoring and transparent user impact analysis. Use this to refine your accountability workflows and build confidence.
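
For the architecture recommendation above, the record you capture per interaction might look something like the following Python sketch. It writes one JSON object per line to an append-only sink and reads the records back for review. The `InteractionRecord` schema, its field names and the helper functions are hypothetical; a production system would add access controls, retention rules and redaction of sensitive data.

```python
import io
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional, TextIO


@dataclass
class InteractionRecord:
    # Hypothetical schema covering prompt, response, version, metadata, feedback.
    prompt: str
    response: str
    model_version: str
    metadata: dict = field(default_factory=dict)
    user_feedback: Optional[str] = None
    record_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(sink: TextIO, record: InteractionRecord) -> None:
    """Append one record as a single JSON line."""
    sink.write(json.dumps(asdict(record), sort_keys=True) + "\n")


def load_records(source: TextIO) -> list:
    """Read every non-empty JSON line back into a list of dicts."""
    return [json.loads(line) for line in source if line.strip()]


# An in-memory buffer stands in for a file or log service in this sketch.
buffer = io.StringIO()
append_record(buffer, InteractionRecord(
    prompt="Draft a refund email",
    response="Dear customer, ...",
    model_version="vendor-model-2023-06",
    metadata={"product": "support-assistant", "pilot": True},
))
append_record(buffer, InteractionRecord(
    prompt="Summarise the policy",
    response="The policy states ...",
    model_version="vendor-model-2023-06",
    user_feedback="thumbs_down",
))

buffer.seek(0)
records = load_records(buffer)
print(len(records), records[1]["user_feedback"])  # 2 thumbs_down
```

A line-oriented format like this is easy to ship into whatever log store you already run, and the same records can feed the pilot analysis and later audits.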

Summary with Accelerai

OpenAI’s June 2023 comment to the NTIA on AI accountability is more than policy boilerplate. It sends a clear message to the enterprise world: as AI capabilities advance and foundation models become central to product development, accountability is now critical infrastructure.

For large companies building AI systems, this means you must design not only for feature delivery but also for governance, transparency, monitoring, vendor strategy and lifecycle oversight.

If you treat accountability as a side constraint, you risk lagging behind. But if you embed accountability in your product strategy, you build operational resilience, regulatory alignment and trust, which together become a competitive advantage.

At Accelerai, we help enterprises map this complex terrain, from evaluating vendor ecosystems and establishing governance frameworks to integrating accountability into system architecture and cost modelling. If you are planning to deploy AI at scale, now is the time to build for accountability, not just capability.