Anthropic announces Claude, its new AI assistant and model family designed with emphasis on helpfulness, honesty, and harmlessness.

Claude is positioned not simply as a conversational agent but as a general-purpose text and reasoning engine that enterprises can embed into applications – from summarisation and search to writing assistance, coding, Q&A, and more.

For large organisations seeking to adopt or build AI-driven products, Claude represents a compelling alternative in the evolving landscape of foundation models.

What Is Claude?

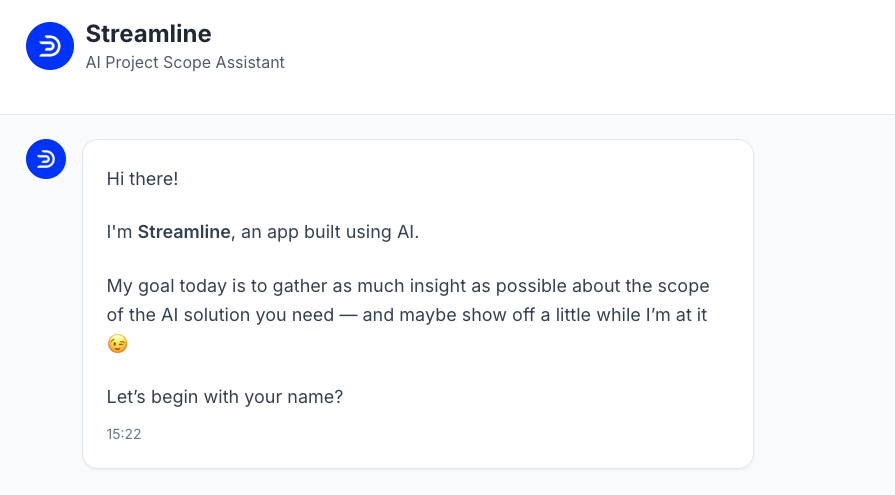

Claude is Anthropic’s AI assistant and model offering, available via a chat interface and via an API in its developer console. It aims to provide robust text processing and conversational capabilities while reducing harmful outputs and improving controllability.

Key details include:

Variants: At launch, Anthropic offers Claude (higher-performance) and Claude Instant (lighter, faster, lower-cost). The idea is to allow tradeoffs between latency, cost, and capability depending on the use case.

Steerability & controllability: Claude is designed to allow users to influence personality, tone, and behaviour. It is more responsive to instructions on how to behave (not just what to do).

Reduced harmful outputs: Anthropic emphasises that Claude is less likely to produce undesirable or unsafe content, thanks to its training, safety mitigations, and guardrail mechanisms.

Versatility in tasks: Claude is intended to support summarisation, search augmentation, creative and collaborative writing, question answering, code assistance, and more.

Developer access: Through API access, enterprises can integrate Claude into their own products or services, routing conversational or text workflows through the model.

Core Capabilities & Differentiators

What stands out about Claude (as of its introduction) in the crowded AI assistant / LLM space:

Better safety & Predictability

One of Claude’s key selling points is its design for “helpful, honest, harmless” behaviour. That means fewer “hallucinations”, less generation of harmful content, and more stable behaviour across prompts. For enterprise use, that improves trust and reduces risk in deployment.

Steerability

Claude allows more precise direction over style, tone, and behaviour. For instance, you can ask it to adopt a “concise, neutral tone” or “friendly voice” better than in some models where output style is harder to control.

Performance Trade-offs Via Variants

Claude Instant is lower-latency and more budget-friendly, making it suitable for high-volume, lower-complexity use cases. The full Claude variant offers deeper reasoning or more nuanced output when needed.

Ease of Use & Integration

The chat interface is meant for exploration and prototyping, while the API is intended for embedding. This dual mode helps enterprises experiment and then scale.

Partner Integrations from Day One

Claude was already being tested in closed alpha with partners like Notion, Quora (via Poe), DuckDuckGo, and others. These integrations help validate the model in real settings before its broader launch.

Use Cases & Early Integrations

Claude’s launch was accompanied by a handful of partner integrations and use cases that illustrate how enterprises and products might leverage it:

Notion AI

Claude integrates into Notion as a writing, summarisation, or assistance layer inside a workspace – helping users draft or improve content directly where they’re working.

Quora / Poe

Through Quora’s Poe platform, users can interact with Claude in a conversational Q&A setting. Feedback suggests users appreciate the readability, clarity, and natural conversational feel.

DuckAssist with DuckDuckGo

Claude is being used to power “Instant Answers” in DuckDuckGo search results, generating natural language responses to queries using sources like Wikipedia. This shows how Claude might bridge search and generative response.

Legal / Contract Review (Robin AI)

A legal infrastructure business is using Claude to parse parts of contracts, suggest alternative phrasing, summarise complex passages, and translate legalese into more accessible language. Early feedback claims that Claude handles technical language well.

Education / Tutoring (Juni Learning)

Claude is deployed as a tutor in certain educational settings, helping students understand maths, reading comprehension, or symbol interpretation, delivering more natural explanations than earlier models.

As these examples show, Claude is being plugged into both content production workflows and domain-specific reasoning/augmentation tasks.

Challenges, Risks & Considerations

Even with a promising launch, there are important caveats and risks that enterprises must consider when evaluating Claude (or any LLM) in 2023:

Edge-case Behaviour & Hallucinations

No model is immune to mistakes. Claude may still generate incorrect, misleading, or nonsensical outputs, especially in unfamiliar domains or under adversarial prompts.

Bias & Fairness Risks

Claude’s training data and guardrails must be scrutinised; outputs may still unintentionally reflect demographic bias, stereotypes, or disparities across user groups.

Safety/Misuse Vectors

Malicious prompt engineering, jailbreaks, or adversarial attacks may find ways to override Claude’s safeguards. Enterprises must build additional layers of protection, moderation, or filtering.

Operational consistency & SLAs

Enterprise deployments demand high reliability, predictable latency, and uptime guarantees. As a new model, Claude’s support, SLAs, failover behaviour, and ecosystem maturity may not yet match incumbent providers.

Data privacy & compliance

Enterprises must understand how Claude handles user data, how logs are stored, whether training data is exposed or filtered, and whether compliance (such as GDPR) is satisfied. Data flows to/from the model must be auditable.

Integration Risk & Migration Cost

Building systems around Claude’s APIs or idiosyncrasies may create lock-in. If future models or vendors change interfaces, migrating or recomposing the stack may involve work.

Model Updates and Versioning

As Claude evolves, breaking changes or performance shifts may occur. Systems must plan for versioning, rollback, and compatibility during upgrades.

Guidance for Enterprise Adoption

Given the opportunities and risks, here’s a recommended path enterprises should follow if considering Claude:

Pilot / Proof of Concept

Begin with lower-risk workflows (customer service bots, internal assistants, and summarisation tools) to evaluate how Claude handles your domain, prompts, and data.

Benchmark Against Alternatives

Run side-by-side tests with other models (e.g., GPT variants, Claude-like systems) on your workloads to compare accuracy, cost, latency, hallucination frequency, and safety.

Domain-specific Testing & Adversarial Probing

Test with your use-case inputs, edge cases, ambiguous queries, and domain jargon. Push Claude to fail or misbehave to identify weaknesses.

Instrument and Log Thoroughly

Capture inputs, outputs, confidence, anomalies, and user corrections. Build dashboards and alerts on “unexpected” or “low-confidence” responses.

Human-in-the-loop & Fallback Logic

For critical tasks, do not allow Claude’s output to go live without review. Use human raters or editors, and provide fallback or safe default responses.

Versioning & Safety Rollback Plans

Maintain multiple model versions, with the ability to roll back if a newer version declines in quality or safety. Use canary deployments or AB tests.

Contractual Guarantees & Transparency Demands

When negotiating with Anthropic or intermediary providers, require assurances around data handling, uptime, support, auditability, and security.

Governance & Oversight

Involve legal, compliance, security, ethics, and domain experts early. Define acceptable error thresholds, mitigation strategies, and escalation paths for problematic behaviour.

Iterative improvement Use your live usage and feedback data to improve prompts, guardrails, or selection logic over time. Monitor for drift, bias, or emerging misuse patterns.

Summary – Claude’s Introduction

Claude’s introduction in March 2023 is a notable development in the enterprise AI space. By focusing on safety, controllability, and helpfulness, Anthropic is positioning Claude as a serious contender for mission-critical applications, not just conversational experiments.

For enterprises, Claude offers potential: a model you can steer, integrate, and trust more than many earlier generative systems. But the path to successful deployment is cautious: test thoroughly, govern tightly, instrument robustly, and build fallback or human oversight layers.