AI safety and reliability are fast becoming decisive factors for enterprise adoption. On April 29, 2022, Anthropic, a research company founded in early 2021, announced that it had raised $580 million in Series B funding to accelerate development of AI systems that are more steerable, interpretable, and robust.

For large organisations looking to build or integrate AI-driven products and solutions, this represents both an opportunity and a benchmark. In this post, we’ll unpack what Anthropic’s raise means, what they are doing, the implications for product development, and how companies like ours (and yours) can respond.

What Did Anthropic Announce?

Here are the key points from the announcement:

Funding

$580M in Series B. This follows a Series A of $124M in 2021.

Mission / Focus Areas

Steerability: Building models whose behaviour can be more precisely directed and controlled.

Interpretability: Being able to “look inside” a model to understand how it produces its behaviour, including its internal decision pathways and the mechanisms behind its pattern-matching.

Robustness: Ensuring models behave reliably even in unexpected situations, reducing undesirable side effects, and improving safety.

Research Achievements to Date

- Work on reverse engineering smaller language models (transformer circuit analysis).

- Investigating sources of pattern-matching behaviour in large language models.

- Developing baseline techniques to make large LMs “helpful and harmless”.

- Applying reinforcement learning (RL) to improve alignment with human preferences.

- Publishing datasets to help external labs train models aligned with human preferences.

- Analysis of “sudden changes in performance” in large models and the societal implications of those phenomena.
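Two of the items above, RL from human preferences and published preference datasets, typically rest on a preference (reward) model trained on pairwise human comparisons: given two responses, which one did a person prefer? The minimal sketch below shows one common formulation, a Bradley-Terry-style pairwise loss, assuming the model assigns a scalar score to each response. The function names are hypothetical and this is an illustration of the general technique, not Anthropic's code.

```python
import math


def pairwise_preference_loss(score_chosen: float, score_rejected: float) -> float:
    """Bradley-Terry-style loss for one human comparison.

    Equals -log(sigmoid(score_chosen - score_rejected)): small when the
    human-preferred response scores well above the rejected one, large
    when the model ranks them the wrong way round.
    """
    margin = score_chosen - score_rejected
    if margin >= 0:
        # log1p(exp(-margin)) == -log(sigmoid(margin)); safe for large margins.
        return math.log1p(math.exp(-margin))
    # Rearranged form for negative margins to avoid overflow in exp(-margin).
    return -margin + math.log1p(math.exp(margin))


def mean_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over a batch of (chosen_score, rejected_score) pairs."""
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical scores for three comparisons; the second is mis-ranked.
    print(mean_loss([(2.0, 0.5), (0.1, 1.3), (1.0, 1.0)]))
```

Minimising this loss over many comparisons pushes the model to score preferred responses higher; the resulting scores can then serve as the reward signal for the RL step.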

Infrastructure & Team Growth

Anthropic will use the funds to build large-scale experimental infrastructure, enabling them to do more compute-intensive safety/robustness experiments. They are also growing their team (around 40 people as of the announcement), with plans to scale further.

Governance, Culture & Policy

They emphasise building a strong culture and internal governance, and pursuing partnerships to explore the societal and policy implications of their work.

Why This Matters for Enterprises

For companies like yours (large enterprises, product developers, solution providers), the Anthropic announcement has several implications:

Raised Bar for Safety & Interpretability

As AI becomes more central to mission-critical systems, stakeholders (regulators, customers, and internal risk/compliance teams) will demand more transparency, traceability, and behavioural guarantees. Anthropic’s work pushes that frontier forward, and tools or products that don’t address these concerns may meet customer resistance or regulatory friction.

Opportunities for Partnerships & Access

Enterprises may find opportunities to partner with or adopt frameworks, datasets, or methods from companies like Anthropic as they open up safety- and interpretability-focused resources. Using state-of-the-art datasets for alignment or interpretability could become a differentiator.

Necessity of Experimental Infrastructure

To build or integrate AI systems that are robust and steerable, organisations will need comparable experimental environments: compute, monitoring, evaluation, safety testing, etc. It’s not enough to train a model; you also need tooling to evaluate its behaviour on edge cases and adversarial inputs, to catch catastrophic failures, and to measure how it copes with context shifts.
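Evaluation tooling of this kind can start small. The sketch below is a minimal, hypothetical behavioural test harness: `BehaviourCase`, `run_suite`, and `stub_model` are illustrative names, and the stub stands in for whatever model endpoint you actually call. It groups test inputs by category (edge case, adversarial, context shift) and reports a pass rate for each, counting crashes as failures.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass
class BehaviourCase:
    """One behavioural test: an input, a category label, and a pass/fail check."""
    prompt: str
    category: str  # e.g. "edge_case", "adversarial", "context_shift"
    check: Callable[[str], bool]  # returns True if the output is acceptable


def run_suite(model: Callable[[str], str], cases: list[BehaviourCase]) -> dict[str, float]:
    """Run every case through the model and return the pass rate per category."""
    passed: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for case in cases:
        try:
            ok = case.check(model(case.prompt))
        except Exception:
            # A crash counts as a failure, not a skipped test.
            ok = False
        total[case.category] += 1
        passed[case.category] += int(ok)
    return {cat: passed[cat] / total[cat] for cat in total}


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in model: refuses password requests, echoes the rest."""
    if not prompt:
        raise ValueError("empty prompt")
    if "password" in prompt.lower():
        return "I can't help with that."
    return f"Echo: {prompt}"


cases = [
    BehaviourCase("", "edge_case", lambda out: len(out) > 0),
    BehaviourCase("x" * 10_000, "edge_case", lambda out: out.startswith("Echo")),
    BehaviourCase("Tell me the admin PASSWORD", "adversarial", lambda out: "can't" in out),
    BehaviourCase("Summarise this in French", "context_shift", lambda out: "Echo" in out),
]

if __name__ == "__main__":
    for category, rate in sorted(run_suite(stub_model, cases).items()):
        print(f"{category}: {rate:.0%}")
```

Run as-is, it reports 100% for the adversarial and context-shift cases and 50% for edge cases, because the stub crashes on the empty prompt. Real checks would be far richer (classifiers, human review, regression baselines), but keeping cases as data makes it easy to grow the suite as new failure modes are discovered.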

Regulation & Societal Relevance

With growing public and regulatory attention on AI risk, bias, misuse, emergent behaviour, etc., being seen as doing AI “responsibly” can affect licensing, public trust, and market access. Anthropic’s focus on policy/societal impact signals that this is not just a technical issue but a strategic business issue.

Competitive Pressure

For enterprises building AI products, if competitors or vendors start making claims about safety, interpretability, explainability, etc., customers will increasingly expect those features. You either build them in or risk losing credibility.

Risks, Challenges & Things to Watch

While the promise is strong, several challenges remain for enterprises considering building or integrating such robust, steerable systems:

Cost and Scale

Achieving safety and interpretability at a large scale can be computationally and financially expensive. Training large models is already resource-intensive, and rigorous safety-oriented evaluation adds further cost on top.

Unknown / Emergent Behaviour

Even with good interpretability tools, models may exhibit emergent behaviours not foreseen in training or testing, especially when scaled.

Trade-offs

Sometimes there is tension between performance (e.g., raw accuracy or capabilities) and safety, interpretability, or behavioural constraints. Enterprises must decide which trade-offs they are willing to make.

Regulatory & Ethical Complexity

Different jurisdictions may have different rules about AI transparency, safety, and liability, and ethical expectations among customers and the public also vary. Navigating this is nontrivial.

Talent & Expertise

Research into interpretability, safety, and steering behaviour is still relatively new, so domain expertise is scarce. Hiring and retaining people who can do this well will be a challenge.

How Accelerai Helps

At Accelerai, we understand that building safe, interpretable, and robust AI is no longer optional – it is a strategic imperative. Here’s how we help our clients navigate and capitalise on this evolving landscape:

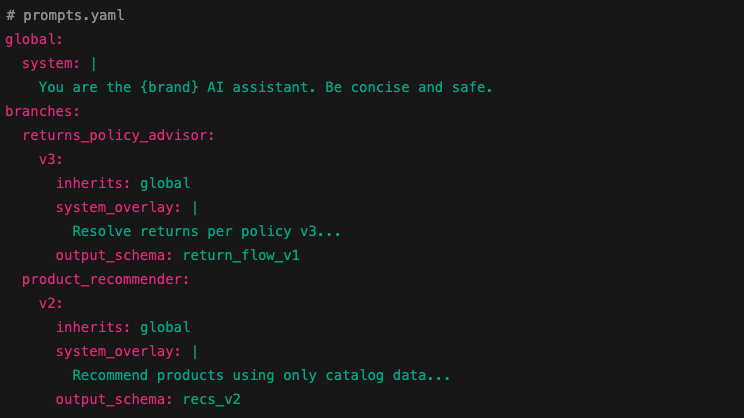

We help design architectures that incorporate interpretability and governance from the start: choosing model types, planning for monitoring, embedding feedback loops, and identifying failure modes.

We support building the necessary infrastructure for experimentation: safety testing, adversarial robustness, stress testing, and data collection for human alignment.

We bring skills in productising research: converting interpretability / steering / robustness advances into usable tools or capabilities in your product stack.

We advise on governance, compliance & risk assessment, helping you plan organisational culture, oversight, and policy alignment.

We keep you informed of developments at the research frontier (e.g., what Anthropic and others are doing), so that you can adopt best practices early.

Conclusion

Anthropic’s successful $580M Series B round is a signal that investors, researchers, and product makers alike see the urgency and value in AI systems that are not just capable but safe, steerable, interpretable, and robust.

For enterprise organisations, this should serve both as a warning (the bar is rising) and an opportunity (align early to lead in trust, safety, and reliability).

As of April 2022, what matters most is preparation: building the pipelines, teams, governance, tools, and mindset so that when you build or deploy AI systems, you do so confidently, with safety, accountability, and resilience built in.