Late in 2022, OpenAI made public its conversational AI assistant ChatGPT, a major milestone in generative AI. Fine-tuned from a model in the GPT-3.5 series, ChatGPT is designed to engage in human-like dialogue: it can answer follow-up questions, admit mistakes, challenge incorrect premises, and reject inappropriate requests.

For enterprises building AI-enabled solutions, ChatGPT opens up significant possibilities in product innovation, automation, customer service and user experience. It also raises important questions about governance, reliability, cost, vendor dependency and risk.

What ChatGPT Is and How It Works

ChatGPT is a conversational AI model fine-tuned using reinforcement learning from human feedback (RLHF) on top of the GPT-3.5 model family. The dialogue format means users can ask follow-up questions, refine their prompts in conversation, and get more interactive, coherent responses than earlier-generation models.

Key functional points include:

Conversational interface: Rather than one-shot completion, ChatGPT supports multi-turn dialogues and context carry-over.

Instruction following: The model is trained to follow explicit instructions (“Write a summary”, “Explain this to me like I’m five”) and respond accordingly.

Moderation and safety-orientated design: Filters and safeguards help ChatGPT decline inappropriate requests or respond in a safe manner.

Accessible via a web interface (and soon APIs): At launch, ChatGPT was made widely available through the web without any integration work, so enterprises can experiment quickly.

Why This Matters for Enterprises & Product Development

For large organisations designing products, managing enterprise solutions or solving complex workflows, ChatGPT introduces a new building block. Here are the main implications:

- Rapid prototyping and automation of text-based workflows

Whether drafting reports, summarising internal documents, generating code snippets, automating customer support responses or brainstorming ideas, ChatGPT allows enterprises to accelerate the development of conversational or text-centric features.

- Improved user experience and accessibility

Integrating conversational AI into customer-facing applications, internal tools or employee assistants can raise engagement, reduce friction and let non-technical users access knowledge or perform tasks in natural language.

- New product opportunities across domains

Enterprises can embed ChatGPT into vertical-specific applications: legal document assistants, technical support chatbots, financial planning helpers, HR self-service tools and content generation platforms. The conversational interface becomes a differentiator for UX and efficiency.

- Competitive differentiation & innovation pace

Early adopters of ChatGPT-powered features may gain a first-mover advantage: faster content creation, smarter assistants and more interactive user workflows. In markets where speed and user experience matter (media, e-commerce, banking), this advantage can be significant.

- Vendor & platform strategy implications

As enterprises evaluate AI vendors and platforms, the availability of ChatGPT signals a shift: conversational AI is becoming more central. Organisations may need to reconsider their architecture, cloud providers, integration strategy and exposure to vendor lock-in accordingly.

- Governance and compliance enablement

For enterprises operating in regulated industries (finance, healthcare and the public sector), ChatGPT’s built-in moderation and instruction-following design provide a starting point for governance. However, the enterprise must still overlay its own policies, reviews, auditing and risk controls.

Practical Implementation Considerations

To successfully integrate ChatGPT into enterprise products or solutions, consider the following implementation-level steps:

Use-case definition: Identify workflows where conversational AI adds value (e.g., internal knowledge search, customer support, document drafting, code generation). Define scope and success metrics (latency, accuracy, user satisfaction, cost).
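The success metrics listed above can be made explicit before a pilot starts, so that "success" is a checkable result rather than an impression. The sketch below encodes hypothetical thresholds and reports which ones a pilot missed. The class and function names (`SuccessCriteria`, `PilotResults`, `failed_criteria`) and all numbers are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    """Hypothetical targets for one use case; replace the numbers with your own."""
    max_p95_latency_s: float     # 95th-percentile response time, in seconds
    min_accuracy: float          # share of answers rated correct by reviewers (0-1)
    min_satisfaction: float      # average user rating on a 1-5 scale
    max_cost_per_request: float  # in your billing currency


@dataclass(frozen=True)
class PilotResults:
    p95_latency_s: float
    accuracy: float
    satisfaction: float
    cost_per_request: float


def failed_criteria(target: SuccessCriteria, actual: PilotResults) -> list[str]:
    """Return the metrics that missed their target; an empty list means the pilot passed."""
    failures = []
    if actual.p95_latency_s > target.max_p95_latency_s:
        failures.append("latency")
    if actual.accuracy < target.min_accuracy:
        failures.append("accuracy")
    if actual.satisfaction < target.min_satisfaction:
        failures.append("satisfaction")
    if actual.cost_per_request > target.max_cost_per_request:
        failures.append("cost")
    return failures


if __name__ == "__main__":
    target = SuccessCriteria(3.0, 0.90, 4.0, 0.05)
    actual = PilotResults(2.1, 0.86, 4.3, 0.03)
    print(failed_criteria(target, actual))  # ['accuracy']
```

Agreeing on these thresholds with business stakeholders up front makes the later go/no-go decision much less contentious.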

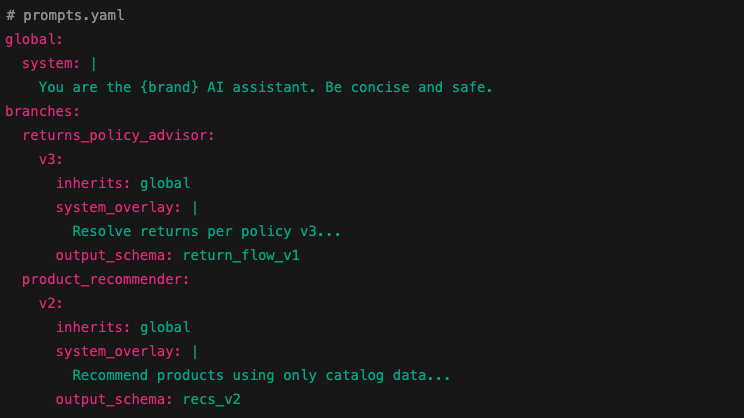

Prompt engineering & context design: Because ChatGPT behaviour depends heavily on prompt design and context, build reusable prompt libraries, test different instruction styles, and manage conversation history within the context window.
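Here is a minimal sketch of a versioned prompt library plus conversation-history trimming. It rests on two assumptions. First, chat messages are dicts with `role` and `content` keys. Second, tokens can be estimated at roughly four characters each, which is only a rough heuristic; real budgets should use the vendor's own tokenizer. The template names and helper functions are hypothetical.

```python
from string import Template

# Hypothetical prompt library: templates keyed by (name, version) so that
# changes can be reviewed and regression-tested like code.
PROMPTS = {
    ("summarise", "v1"): Template(
        "Summarise the following text in $n_bullets bullet points "
        "for a $audience audience:\n\n$text"
    ),
}


def render(name: str, version: str, **fields: str) -> str:
    # substitute() raises KeyError on a missing placeholder, so a broken
    # template fails loudly instead of sending an incomplete prompt.
    return PROMPTS[(name, version)].substitute(**fields)


def estimate_tokens(text: str) -> int:
    # Rough heuristic of about 4 characters per token for English text;
    # a real budget should use the vendor's own tokenizer.
    return max(1, len(text) // 4)


def trim_history(messages: list[dict[str, str]], budget: int) -> list[dict[str, str]]:
    """Keep the system message plus the most recent turns that fit within `budget` tokens."""
    system, turns = messages[0], messages[1:]
    used = estimate_tokens(system["content"])
    kept = []
    for msg in reversed(turns):  # newest first
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break  # stop at the first turn that does not fit, so no gaps appear
        kept.append(msg)
        used += cost
    return [system] + kept[::-1]  # restore chronological order
```

Dropping the oldest turns first is the simplest policy. A common alternative is to replace older turns with a short model-generated summary, at the cost of an extra call.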

Integration architecture: Determine how ChatGPT will be embedded: via web UI, custom app, API when available, or hybrid model with retrieval-augmented generation connected to internal documents or systems. Plan for latency, concurrency, access control, and input/output pipelines.
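To illustrate the retrieval-augmented pattern, here is a deliberately simplified sketch. It ranks internal documents by keyword overlap with the question, which stands in for the embedding-based vector search a production system would more likely use. It then builds a prompt asking the model to answer only from the retrieved passages. The document IDs and function names are hypothetical, and no vendor API is called.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Rank documents by occurrences of query terms (a stand-in for vector search)."""
    q_terms = set(tokenize(query))
    scores = {}
    for doc_id, text in documents.items():
        counts = Counter(tokenize(text))
        scores[doc_id] = sum(counts[t] for t in q_terms)
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [d for d in ranked[:k] if scores[d] > 0]  # drop documents with no overlap


def build_grounded_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that restricts the model to the retrieved sources."""
    context = "\n\n".join(f"[{d}] {documents[d]}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the answer is not in the sources, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )
```

Keeping retrieval inside your own systems also means access control can be enforced before any text reaches the model.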

Data access, privacy and security: If ChatGPT accesses or processes enterprise data (documents, internal knowledge base, customer info), ensure encryption, data segmentation, compliance with data protection regulations, and contractual clarity with the vendor.
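One common control is to redact personal data before a prompt leaves your network. The sketch below uses two illustrative regular expressions, for emails and phone numbers. It keeps a placeholder mapping so responses can be re-identified internally. The patterns are assumptions and far from complete. A production system would need broader coverage or a dedicated PII-detection service.

```python
import re

# Illustrative patterns only; they will miss many formats and all other PII types.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Replace matches with numbered placeholders and return the placeholder-to-value mapping."""
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        count = 0

        def substitute(match: re.Match) -> str:
            nonlocal count
            count += 1
            placeholder = f"<{label}_{count}>"
            mapping[placeholder] = match.group(0)
            return placeholder

        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Re-insert the original values into a model response, inside your own systems."""
    for placeholder, value in mapping.items():
        text = text.replace(placeholder, value)
    return text
```

The mapping never leaves your infrastructure; only the placeholder text is sent to the vendor.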

Monitoring, logging and feedback loops: Capture prompts, responses, user edits, error rates, complaints, hallucinations and biases. Build dashboards for KPIs and analyse performance drift over time.
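Here is a minimal sketch of structured interaction logging. It assumes one JSON record per interaction and a few hypothetical KPI definitions: flag rate, edit rate and mean latency. Field names are illustrative. Stored prompts and responses may themselves contain sensitive data, so they may need redaction or restricted access.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class Interaction:
    prompt_id: str       # prompt template and version, e.g. "summarise:v1"
    model_version: str   # whichever identifier the vendor exposes
    prompt: str          # consider redacting before storage
    response: str
    latency_s: float
    user_edited: bool    # the user had to correct the output
    flagged: bool        # reported as wrong, biased or unsafe
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def to_log_line(event: Interaction) -> str:
    # One JSON object per line is easy to ship into most log and analytics pipelines.
    return json.dumps(asdict(event))


def kpis(events: list[Interaction]) -> dict[str, float]:
    """Hypothetical KPI definitions computed over a window of interactions."""
    if not events:
        return {}
    n = len(events)
    return {
        "flag_rate": sum(e.flagged for e in events) / n,
        "edit_rate": sum(e.user_edited for e in events) / n,
        "mean_latency_s": sum(e.latency_s for e in events) / n,
    }
```

Computing the same KPIs per `model_version` and per `prompt_id` is what makes drift visible when either one changes.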

Human-in-the-loop and fallback logic: For high-risk outputs (legal advice, financial recommendations, compliance documents), include escalation to a human reviewer, safe defaults, or post-processing.
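The escalation logic can be expressed as a small routing function. This is a sketch under stated assumptions. The topic taxonomy in `HIGH_RISK_TOPICS` is hypothetical. `confidence` is assumed to come from your own evaluator, such as a classifier or a reviewer rating, not from ChatGPT itself.

```python
from dataclasses import dataclass

# Hypothetical taxonomy of topics that always require human sign-off.
HIGH_RISK_TOPICS = {"legal", "financial", "compliance"}


@dataclass
class Draft:
    topic: str
    text: str
    confidence: float  # assumed score in [0, 1] from your own evaluator


def route(draft: Draft, min_confidence: float = 0.8) -> str:
    """Decide whether a draft can be sent automatically or needs a human reviewer."""
    if not draft.text.strip():
        return "fallback"       # empty output: show a safe default message instead
    if draft.topic in HIGH_RISK_TOPICS:
        return "human_review"   # always reviewed, regardless of confidence
    if draft.confidence < min_confidence:
        return "human_review"
    return "auto_send"
```

Keeping the rules in one function makes them easy to audit and to tighten later without touching the rest of the pipeline.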

Versioning and change management: Track which model version is in use, validate updates, run regression tests, and have fallback paths if responses degrade.
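Here is a sketch of a regression harness. It assumes each model version can be wrapped as a plain function from prompt to answer. The golden cases and keyword checks are hypothetical and intentionally crude. Real suites often add human ratings or model-graded evaluations. Under this scheme, a new version is promoted only if `run_regression` returns an empty list.

```python
from typing import Callable

# Hypothetical golden cases: each pairs a prompt with checks the answer must pass.
GOLDEN_CASES = [
    {"prompt": "What is our refund window?", "must_include": ["30 days"]},
    {"prompt": "Summarise policy X.", "must_exclude": ["I am not sure"]},
]


def run_regression(model: Callable[[str], str], cases: list[dict]) -> list[str]:
    """Return the prompts whose answers fail their checks for this model version."""
    failures = []
    for case in cases:
        answer = model(case["prompt"]).lower()
        ok = all(s.lower() in answer for s in case.get("must_include", []))
        ok = ok and not any(s.lower() in answer for s in case.get("must_exclude", []))
        if not ok:
            failures.append(case["prompt"])
    return failures


if __name__ == "__main__":
    # Stub "versions" standing in for real model calls.
    def current(p: str) -> str:
        return "Refunds are accepted within 30 days." if "refund" in p else "Policy X covers travel."

    def candidate(p: str) -> str:
        return "Refunds are accepted within 14 days." if "refund" in p else "I am not sure."

    print(run_regression(current, GOLDEN_CASES))    # []
    print(run_regression(candidate, GOLDEN_CASES))  # both prompts fail
```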

Cost modelling and usage management: Assess expected volume, cost implications (per-call or per-token charges when API is available), infrastructure scaling, and compute latency. Budget accordingly.
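A back-of-the-envelope model helps size the budget before real pricing is confirmed. The sketch below multiplies volume by tokens and by a price per 1,000 tokens. Every number in the example is a placeholder assumption. `tokens_per_turn` should count the prompt, any conversation history resent with it, and the response.

```python
def monthly_cost(conversations_per_day: int, turns_per_conversation: int,
                 tokens_per_turn: int, price_per_1k_tokens: float, days: int = 30) -> float:
    """Estimate spend as total tokens / 1,000 x price per 1,000 tokens (all inputs are assumptions)."""
    # If the API is stateless, earlier turns are resent with each new one, so
    # tokens_per_turn should reflect that growing history, not just the new message.
    total_tokens = conversations_per_day * turns_per_conversation * tokens_per_turn * days
    return total_tokens / 1000 * price_per_1k_tokens


if __name__ == "__main__":
    # Placeholder figures only; substitute your own volumes and the vendor's actual price list.
    cost = monthly_cost(10_000, 6, 500, price_per_1k_tokens=0.002)
    print(f"{cost:,.2f}")  # 1,800.00
```

Consider a placeholder scenario: 10,000 conversations a day, six turns each, 500 tokens per turn and 0.002 per 1,000 tokens. That comes to 900 million tokens and a cost of about 1,800 per 30-day month. Shortening prompts or trimming history scales the cost down linearly.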

Vendor evaluation and contractual terms: Ensure the vendor provides SLAs, transparency around data usage and model updates, the ability to audit, and clarity on support and reliability.

Governance, ethics & responsible use: Establish internal oversight involving legal, compliance, risk, ethics, and security stakeholders. Set policies around acceptable use, user disclaimers, record-keeping and review.

Risks, Limitations & Governance Challenges

While ChatGPT opens many possibilities, enterprises must recognise limitations and manage risks:

Hallucinations and inaccuracies: Despite strong performance, ChatGPT may produce plausible-sounding but incorrect or misleading responses. All critical use cases need guardrails.

Bias and fairness concerns: Training data may embed societal biases; responses may reflect or amplify them. Enterprises should test across user demographics, prompt variations and domains.
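One practical way to test prompt variations is counterfactual probing. Keep a prompt fixed, swap a single attribute such as a name, and compare a score computed on each response. In the sketch below, the model and scorer are stand-ins you would supply, for example a sentiment model, a refusal detector or reviewer ratings. The template, variants and word-count scorer are hypothetical.

```python
from typing import Callable


def counterfactual_prompts(template: str, slot: str, variants: list[str]) -> dict[str, str]:
    """Fill one slot with each variant while keeping the rest of the prompt identical."""
    return {v: template.format(**{slot: v}) for v in variants}


def score_gap(model: Callable[[str], str], scorer: Callable[[str], float],
              template: str, slot: str, variants: list[str]) -> tuple[float, dict[str, float]]:
    """Score each variant's response; a large gap flags the case for human review, not proof of bias."""
    prompts = counterfactual_prompts(template, slot, variants)
    scores = {v: scorer(model(p)) for v, p in prompts.items()}
    return max(scores.values()) - min(scores.values()), scores


if __name__ == "__main__":
    # Deliberately skewed stub model so that the gap is visible.
    def stub_model(prompt: str) -> str:
        return "Approved with a generous limit." if "Alex" in prompt else "Approved."

    gap, scores = score_gap(
        stub_model,
        lambda response: len(response.split()),  # crude proxy: level of detail
        "Recommend a credit limit for {name}, a nurse earning 40k.",
        "name",
        ["Alex", "Priya"],
    )
    print(gap, scores)  # 4 {'Alex': 5, 'Priya': 1}
```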

Security, privacy and data leakage: If prompts include sensitive or proprietary data, there is a risk of exposure or retention by vendor systems. Clear contractual and operational controls are essential.

User trust and misuse risk: If deployed for customer-facing services, users may assume outputs are fully accurate or authoritative. Enterprises must manage disclaimers, design user interfaces appropriately and maintain human oversight.

Vendor dependency and lock-in: Relying on ChatGPT or its underlying model may limit portability between vendors or cloud providers. Enterprises should architect for flexibility where possible.
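Architecting for flexibility usually means keeping business logic behind a thin, provider-neutral interface. The sketch below uses a `typing.Protocol` for that interface. `ChatModel`, the two stub providers and the `PROVIDERS` registry are hypothetical names. A real adapter would wrap a vendor SDK inside `complete`.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Provider-neutral interface the rest of the application depends on."""
    def complete(self, messages: list[dict[str, str]]) -> str: ...


class EchoModel:
    """Test stub; a real adapter would call a vendor SDK inside complete()."""
    def complete(self, messages: list[dict[str, str]]) -> str:
        return messages[-1]["content"]


class ShoutModel:
    """Second stub, standing in for an alternative provider."""
    def complete(self, messages: list[dict[str, str]]) -> str:
        return messages[-1]["content"].upper()


# Swapping providers becomes a configuration change rather than a code change.
PROVIDERS: dict[str, type] = {"echo": EchoModel, "shout": ShoutModel}


class Assistant:
    def __init__(self, model: ChatModel, system_prompt: str) -> None:
        self.model = model
        self.system_prompt = system_prompt

    def ask(self, question: str) -> str:
        messages = [
            {"role": "system", "content": self.system_prompt},
            {"role": "user", "content": question},
        ]
        return self.model.complete(messages)
```

Because `Assistant` only sees `ChatModel`, the same regression suite can be run against each adapter before a switch.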

Regulatory compliance and auditability: Especially in regulated industries, you’ll need audit logs, versioning, traceability, bias testing, data governance, and transparency. New regulations around AI may soon apply.

Cost, latency, and scale limits: At enterprise scale, the volume of conversations, data throughput, latency requirements, and cost per call will all become significant.
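At scale, rate limits and transient timeouts become routine. A standard mitigation is retrying with exponential backoff and jitter. In this sketch, `TransientError` is a hypothetical exception; you would map your client library's rate-limit and timeout errors onto it. The delay parameters are placeholders. The `sleep` argument is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical: map your client library's rate-limit and timeout errors onto this."""


def with_backoff(call: Callable[[], T], max_attempts: int = 5, base_delay_s: float = 0.5,
                 max_delay_s: float = 8.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on TransientError, waiting a random 'full jitter' delay that grows exponentially."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller or a fallback path
            cap = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise RuntimeError("unreachable")
```

Randomising the delay spreads retries from many clients over time, so they do not all hit the rate limit again at the same moment.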

Change management: As updates are rolled out, the behaviour of ChatGPT may change. Enterprises must manage upgrades and regression testing to ensure alignment with business requirements.

Strategic Recommendations for Enterprises

To make the most of ChatGPT in an enterprise context and mitigate risks, here are recommended strategic actions:

1. Start with a pilot

Launch a controlled pilot in a low-risk domain to validate performance, cost, monitoring and governance flows.

2. Map vendor & cloud strategy

Determine whether ChatGPT (and future models) aligns with your cloud/infrastructure strategy. Consider multi-cloud, fallback options, and portability to avoid lock-in.

3. Define governance frameworks

Establish cross-functional governance (product, legal, compliance, IT, security). Define policies, review cadences, incident-response plans and audit mechanisms.

4. Design for human-in-the-loop

Especially in customer-facing or regulated domains, ensure outputs are reviewed or supervised; design fallback logic and user disclosures.

5. Invest in monitoring & analytics

Build behaviour monitoring (errors, biases, flagged content, user satisfaction) and prompt-engineering dashboards. Define KPIs to measure impact.

6. Plan for scale and cost

Estimate usage growth, cost per conversation, and infrastructure overhead. Optimise prompts, reuse context, and manage token usage and throughput requirements.

7. Audit bias & fairness

Periodically audit the system across roles, demographics, geographies and use cases. Look for skewed outputs, persistent stereotypes, or demographic under-representation.

8. Design a future-proof architecture

Anticipate integrations with retrieval systems, tool-use APIs, or enterprise data pipelines. Design conversational systems to allow model swapping or upgrading with minimal disruption.

9. Engage the business & users

Ensure product stakeholders and business leaders understand both the capabilities and limitations of ChatGPT. Communicate clearly to set expectations.

ChatGPT with OpenAI

The debut of ChatGPT in November 2022 marks a watershed in conversational AI for enterprises. It offers a flexible, powerful platform for embedding conversational experiences into products, automating text workflows and improving user engagement. But it also demands that organisations treat AI deployment with rigour: governance, monitoring, ethics, cost management, vendor strategy and human oversight must all be built in.

Enterprises that adopt ChatGPT thoughtfully, balancing innovation with control, will be best positioned to realise the promise of conversational AI while avoiding the pitfalls. At Accelerai, we help large organisations navigate these decisions, from pilot design and integration to governance frameworks, monitoring strategies and vendor evaluation. If you are planning to embed conversational AI in your product roadmap or solution architecture, now is the time to prepare.